update 2022:

the good old “dd” (non-destructive if used correctly) is still the way to go for benchmarking harddisks under GNU Linux.

a simpler one is “gnome-disk-utility”

also interesting, but not very simple to install & use (complicated! UNIX: KISS! KEEP IT SIMPLE!): fio https://openbenchmarking.org/test/pts/fio-1.12.0
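once fio is installed, a single job does not have to be complicated. the following is only a minimal sketch, not the openbenchmarking test profile: the function names and the test file path are made up for illustration, the fio options used (--name, --filename, --rw, --bs, --size, --direct, --ioengine, --output-format) are standard ones.

```python
import shutil
import subprocess


def fio_command(testfile, rw="write", size="1G", block_size="1M"):
    """Build the argument list for one simple fio job (nothing is executed here)."""
    return [
        "fio",
        "--name=seq-" + rw,        # job name, free text
        "--filename=" + testfile,  # hypothetical test file on the disk to measure
        "--rw=" + rw,              # "write", "read", "randwrite" or "randread"
        "--bs=" + block_size,
        "--size=" + size,
        "--direct=1",              # bypass the page cache (O_DIRECT)
        "--ioengine=libaio",
        "--output-format=json",
    ]


def run_fio(testfile, rw="write"):
    """Run the job if fio is installed; return its JSON report as text, else None."""
    if shutil.which("fio") is None:
        return None
    result = subprocess.run(
        fio_command(testfile, rw), capture_output=True, text=True, check=True
    )
    return result.stdout
```

run_fio("/media/user/to/mountpoint/testfile", "read") would then return the report for a sequential read, or None when fio is missing.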

update:

while this benchmark is still valid, very simple and very cross platform, it focuses on the team of cpu + harddisk (two very important components). (why is there no multi core md5? this puts many-core arm servers at a disadvantage)
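on the multi core md5 question: a single md5 stream is sequential by design (every block depends on the state left by the previous one), so the usual way to use many cores is to hash independent files or chunks side by side. a minimal sketch under that assumption (md5_many is a made-up helper name, this is not part of the benchmark scripts):

```python
import hashlib
import os
from concurrent.futures import ThreadPoolExecutor


def md5_hex(data):
    """MD5 of one buffer as a hex string."""
    return hashlib.md5(data).hexdigest()


def md5_many(buffers, workers=None):
    """Hash independent buffers in parallel; result order matches input order."""
    workers = workers or os.cpu_count() or 1
    # hashlib releases the GIL while hashing buffers larger than 2047 bytes,
    # so plain threads can keep several cores busy here
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(md5_hex, buffers))
```

with buffers of a few megabytes each, md5_many spreads the work over all cores reported by os.cpu_count(); the resulting digests are identical to hashing the buffers one after another.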

maybe stress-ng is the way to go? https://www.cyberciti.biz/faq/stress-test-linux-unix-server-with-stress-ng/

harddisk performance of real and virtual machines is, of course, important for the overall performance of the system.

of course, a system is only as fast as the slowest part “on the team”.

so even a super fast SSD bundled with a super slow single core celeron will still increase performance by roughly +20%.
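one rough way to reason about such numbers is Amdahl's law: only the share of the runtime that waits on the disk gets faster. this is a back-of-the-envelope sketch, not something the benchmarks in this article measure; the fractions used are made-up examples.

```python
def overall_speedup(disk_fraction, disk_speedup):
    """Amdahl's law: only the disk-bound share of the runtime gets faster.

    disk_fraction: share of the total runtime spent waiting on the disk (0..1)
    disk_speedup:  how many times faster the new disk is (> 0)
    """
    if not 0.0 <= disk_fraction <= 1.0:
        raise ValueError("disk_fraction must be between 0 and 1")
    if disk_speedup <= 0:
        raise ValueError("disk_speedup must be positive")
    return 1.0 / ((1.0 - disk_fraction) + disk_fraction / disk_speedup)
```

if about one sixth of the runtime is disk-bound, even an infinitely fast disk (overall_speedup(1 / 6, float("inf"))) yields roughly 1.2x, which is a +20% gain; the slow cpu limits the rest.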

the most basic harddisk benchmarks:

using fio / dd / python based “small files”

https://dwaves.de/2014/12/10/gnu-linux-sequential-read-write-harddisk-benchmark-small-files-python-harddisk-benchmark/
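to give an idea of what a “small files” benchmark does, here is a minimal sketch. it is not the script from the linked article: file count, file size and the function name are assumptions, only the step labels follow the timings shown in the results further down.

```python
import os
import random
import shutil
import tempfile
import time


def bench_small_files(base_dir, count=1000, size=4096):
    """Time create / rewrite / read / delete of many small files below base_dir."""
    test_dir = tempfile.mkdtemp(prefix="smallfiles_", dir=base_dir)
    paths = [os.path.join(test_dir, "file_%06d" % i) for i in range(count)]
    payload = os.urandom(size)
    timings = {}

    def timed(label, action):
        start = time.perf_counter()
        action()
        timings[label] = time.perf_counter() - start

    def write_all():
        for path in paths:
            with open(path, "wb") as handle:
                handle.write(payload)

    def read_all(order):
        for path in order:
            with open(path, "rb") as handle:
                handle.read()

    shuffled = random.sample(paths, len(paths))  # same files, random order
    try:
        timed("create files", write_all)
        timed("rewrite files", write_all)
        timed("read linear", lambda: read_all(paths))
        timed("read random", lambda: read_all(shuffled))
    finally:
        # always clean up, even if one of the steps above failed
        timed("delete all files", lambda: shutil.rmtree(test_dir))
    return timings
```

bench_small_files("/media/user/to/mountpoint") returns a dict of seconds per step. because the files were just written, the read steps are likely served from the page cache, so they say more about cpu + filesystem overhead than about the raw disk.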

ntfs vs ext4: performance

ntfs is (currently 2021-09 under Debian 10) 3x slower than ext4!

because cross OS benchmarks are (mostly) lacking, it is very hard to compare NTFS (on native Windows) with ext4 (on native GNU Linux) (the slowness of NTFS under GNU Linux could also be a “driver” issue, it just proves that using NTFS under GNU Linux is possible, but slow).

testing on giada f302:

===== system used =====
Debian 10.9
uname -a
Linux IdealLinux2021 4.19.0-16-amd64 #1 SMP Debian 4.19.181-1 (2021-03-19) x86_64 GNU/Linux

=== show info on cpu ===
cat /proc/cpuinfo |head
processor : 0
vendor_id : GenuineIntel
cpu family : 6
model : 78
model name : Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz
stepping : 3
microcode : 0xd6
cpu MHz : 754.355
cache size : 3072 KB
physical id : 0

=== show info on harddisk ===
Model Number: KINGSTON SKC600512G
Firmware Revision: S4200102
Transport: Serial, ATA8-AST, SATA 1.0a, SATA II Extensions, SATA Rev 2.5, SATA Rev 2.6, SATA Rev 3.0

===== mounted EXT4 =====
========== writing 3GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.8087 s, 370 MB/s
real 0m5.815s
user 0m0.000s
sys 0m2.517s
========== reading 6GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 4.26741 s, 503 MB/s
real 0m4.276s
user 0m0.000s
sys 0m1.624s
========== tidy up remove testfile ==========

time /scripts/bench/bench_harddisk.sh; # re-run at 2022-03
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.43294 s, 395 MB/s
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.35569 s, 401 MB/s
real 0m10.955s

===== small files python based harddisk + cpu benchmark =====
time /scripts/bench/bench_harddisk_small_files.py
create test folder: 0.0119922161102
create files: 9.25402116776
rewrite files: 2.01614999771
read linear: 1.82262396812
read random: 3.44922590256
delete all files: 4.54426288605
real 0m27.000s <- 3.2x FASTER than with NTFS 3g!
user 0m3.287s
sys 0m14.839s

===== mounted NTFS =====
/sbin/mount.ntfs --version
ntfs-3g 2017.3.23AR.3 integrated FUSE 28
========== writing 3GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 14.6907 s, 146 MB/s
real 0m14.698s
user 0m0.000s
sys 0m2.901s
========== reading 6GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 0.818364 s, 2.6 GB/s <- ok? (RAM caching)
real 0m0.823s
user 0m0.000s
sys 0m0.629s
========== tidy up remove testfile ==========

time /scripts/bench/bench_harddisk_small_files.py
create test folder: 0.380342006683
create files: 44.9138729572
rewrite files: 7.5974009037
read linear: 6.24533390999
read random: 8.19712495804
delete all files: rm: cannot remove 'test': Directory not empty <- also does not happen under ext4
14.9125750065
real 1m27.667s <- 3.2x SLOWER than with ext4 (ext4 needs ~70% less time)! also a lot of cpu usage!
user 0m5.385s
sys 0m19.761s
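the 2.6 GB/s NTFS read above is far more than a SATA Rev 3.0 link (6 Gbit/s) can deliver, so that read came from RAM, not from the SSD. one way to make read results more honest is to ask the kernel to evict the test file from the page cache before reading it back. a minimal sketch for Linux (drop_file_cache is a made-up helper name; the call is only a hint to the kernel, and whether it has full effect on a FUSE filesystem like ntfs-3g is an assumption):

```python
import os


def drop_file_cache(path):
    """Ask the kernel to evict the cached pages of one file (no root needed).

    Returns True if the hint was sent, False if the platform lacks posix_fadvise.
    """
    if not hasattr(os, "posix_fadvise"):
        return False
    fd = os.open(path, os.O_RDONLY)
    try:
        os.fsync(fd)  # dirty pages can not be dropped, so flush them first
        os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)  # length 0 = whole file
    finally:
        os.close(fd)
    return True
```

the system-wide alternative is to run sync and then write 3 to /proc/sys/vm/drop_caches as root, which empties the page cache for all files.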

samsung ssd pro 850 on giada f302:

===== giada f302 bench samsung ssd 850 pro =====
time /scripts/bench/bench_harddisk.sh; # https://dwaves.de/scripts/bench/bench_harddisk.sh
=== starting harddisk sequential write and read bench v1 ===
no need to run it as root because dd is a very dangerous utility
modify paths /media/user/to/mountpoint/ manually then run the script

=== show info on harddisk ===
Model Number: Samsung SSD 850 PRO 256GB
Firmware Revision: EXM04B6Q
Transport: Serial, ATA8-AST, SATA 1.0a, SATA II Extensions, SATA Rev 2.5, SATA Rev 2.6, SATA Rev 3.0

========== writing 3GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.53786 s, 388 MB/s
real 0m5.543s
user 0m0.000s
sys 0m2.417s
========== reading 6GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 4.31814 s, 497 MB/s
real 0m4.327s
user 0m0.000s
sys 0m1.621s
========== tidy up remove testfile ==========
real 0m10.008s
user 0m0.005s
sys 0m4.172s

===== python bench with small files =====
time /scripts/bench/bench_harddisk_small_files.py
create test folder: 0.0197870731354
create files: 9.34433507919
rewrite files: 2.0522480011
read linear: 1.93751502037
read random: 3.44131994247
delete all files: 4.48000884056
real 0m27.600s <- wow! only 2% SLOWER than the more recent Kingston :)
user 0m3.124s
sys 0m14.886s

testing on giada f300:

hostnamectl
Static hostname: giada
Icon name: computer-desktop
Chassis: desktop
Operating System: Debian GNU/Linux 10 (buster)
Kernel: Linux 4.19.0-11-amd64
Architecture: x86-64

su - root
lshw -class tape -class disk -class storage

========== what harddisk / controllers are used ==========

*-sata

description: SATA controller

product: 8 Series SATA Controller 1 [AHCI mode]

vendor: Intel Corporation

physical id: 1f.2

bus info: pci@0000:00:1f.2

logical name: scsi0

version: 04

width: 32 bits

clock: 66MHz

capabilities: sata msi pm ahci_1.0 bus_master cap_list emulated

configuration: driver=ahci latency=0

resources: irq:45 ioport:f0b0(size=8) ioport:f0a0(size=4) ioport:f090(size=8) ioport:f080(size=4) ioport:f060(size=32) memory:f7e1a000-f7e1a7ff

benchmark script used:

can be run as non-root user:

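#!/bin/bash
# sequential write + read benchmark; adjust ./mountpoint/ to the filesystem under test

echo "=== starting harddisk sequential write and read bench v1 ==="
echo "no need to be run as root"

echo "========== writing 3GB of zeroes =========="
# note: Linux transfers at most 2147479552 bytes per read()/write() call,
# so bs=3G count=1 produces a 2.0 GiB file ("0+1 records"), as seen in the results
# oflag=direct bypasses the page cache during the write
time dd if=/dev/zero of=./mountpoint/testfile bs=3G count=1 oflag=direct

echo "========== reading 3GB of zeroes =========="
# reads back the file written above (the result logs in this article still show the old "6GB" label)
time dd if=./mountpoint/testfile of=/dev/null bs=3G count=1

echo "========== tidy up remove testfile =========="
rm -f ./mountpoint/testfile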
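why do all results show 2147479552 bytes although 3GB were requested? on Linux a single read() or write() call transfers at most 0x7ffff000 bytes, and dd with count=1 issues exactly one such call. writing in smaller chunks avoids that limit. the following is a minimal sketch of the same sequential write + read idea in python, not a replacement for the script: function names and the 1 MiB chunk size are assumptions.

```python
import os
import time

LINUX_MAX_RW = 0x7FFFF000  # 2147479552 bytes: most a single read()/write() moves on Linux


def sequential_write(path, total_bytes, chunk_size=1024 * 1024):
    """Write total_bytes of zeroes in chunks, fsync, and return MB/s."""
    chunk = bytes(chunk_size)
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as handle:
        while written < total_bytes:
            written += handle.write(chunk[: total_bytes - written])
        handle.flush()
        os.fsync(handle.fileno())  # count the time until the data reached the disk
    return written / (time.perf_counter() - start) / 1e6


def sequential_read(path, chunk_size=1024 * 1024):
    """Read the file back in chunks; return (bytes_read, MB/s)."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as handle:
        while True:
            data = handle.read(chunk_size)
            if not data:
                break
            total += len(data)
    return total, total / (time.perf_counter() - start) / 1e6
```

sequential_write("./mountpoint/testfile", 3 * 1024**3) then really writes 3 GiB. unlike dd with oflag=direct this sketch goes through the page cache, so the read step can be served from RAM unless the cache is emptied in between.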

results:

harddisk1: Toshiba 2.5" connected via USB 3.0, filesystem: ext4

about the harddisk:

hdparm -I /dev/sdb|grep Model -A3
ATA device, with non-removable media
Model Number: TOSHIBA MQ01ABD050
Firmware Revision: AX001U
Transport: Serial, ATA8-AST, SATA 1.0a, SATA II Extensions, SATA Rev 2.5, SATA Rev 2.6

benchmark result:

========== writing 3GB of zeroes to /media/user/mountpoint/testfile ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 25.6249 s, 83.8 MB/s
real 0m27.466s
user 0m0.008s
sys 0m3.089s
========== reading 6GB of zeroes from /media/user/mountpoint/testfile ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 22.8702 s, 93.9 MB/s
real 0m22.880s
user 0m0.000s
sys 0m1.923s

harddisk2: Samsung SSD 860 EVO 500GB, filesystem: ext4

about the harddisk:

hdparm -I /dev/sda|grep Model -A3
ATA device, with non-removable media
Model Number: Samsung SSD 860 EVO 500GB
Firmware Revision: RVT03B6Q
Transport: Serial, ATA8-AST, SATA 1.0a, SATA II Extensions, SATA Rev 2.5, SATA Rev 2.6, SATA Rev 3.0

benchmark result:

=== starting harddisk sequential write and read bench v1 ===
no need to run it as root because dd is a very dangerous utility
modify paths /media/user/to/mountpoint/ manually then run the script
========== writing 3GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.46448 s, 393 MB/s
real 0m5.474s
user 0m0.001s
sys 0m2.407s
========== reading 6GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 4.87677 s, 440 MB/s
real 0m4.886s
user 0m0.000s
sys 0m1.260s
========== tidy up remove testfile ==========

# testing conditions might not be exactly fair
# as this ssd was also booted from
# only really fair testing conditions are booting from separate drive or usb

time /scripts/bench/bench_harddisk_small_files.py
create test folder: 0.00678896903992
create files: 10.6135139465
rewrite files: 2.76307606697
read linear: 3.14085292816
read random: 4.85474491119
delete all files: 5.91292095184
real 0m32.690s <- ~21% slower than the KINGSTON SKC600512G (27.000s)
user 0m3.614s
sys 0m18.186s

harddisk3: Samsung SSD 870 EVO 1TB, filesystem: ext4

- upgrade! from KINGSTON SKC600512G (512GBytes) to Samsung SSD 870 1TB (it’s a bit of an lvm2 hassle)

- definitely “feels” faster & more performant than the kingston 🙂

- (but that could also be, because the KINGSTON SKC600512G was almost full X-D)

about the harddisk:

hdparm -I /dev/sda
ATA device, with non-removable media
Model Number: Samsung SSD 870 EVO 1TB
Serial Number: XXXXXXXXXX
Firmware Revision: SVT02B6Q
Transport: Serial, ATA8-AST, SATA 1.0a, SATA II Extensions, SATA Rev 2.5, SATA Rev 2.6, SATA Rev 3.0
Standards:

benchmark result:

time /scripts/bench/bench_harddisk.sh; # first run
=== harddisk sequential write and read bench v1 ===
starting test on the device that holds the current directory the user is in
no need to run it as root
========== writing 3GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.47841 s, 392 MB/s
real 0m5.489s
user 0m0.005s
sys 0m2.576s
========== reading 6GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.47224 s, 392 MB/s
real 0m5.481s
user 0m0.000s
sys 0m1.547s
========== tidy up remove testfile ==========
real 0m11.118s <- 1.49% slower than the kingston reference (10.955s) on the first run
user 0m0.009s
sys 0m4.266s

time /scripts/bench/bench_harddisk.sh; # second run
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.29553 s, 406 MB/s
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 4.98106 s, 431 MB/s
real 0m10.446s <- +4.64% faster on the second run

time /scripts/bench/bench_harddisk.sh; # third run
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.25046 s, 409 MB/s
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 5.18407 s, 414 MB/s
real 0m10.602s <- still +3.22% faster on third run

time /scripts/bench/bench_harddisk_small_files.py
create test folder: 0.00240206718445
create files: 11.0404560566
rewrite files: 2.73733401299
read linear: 3.20042109489
read random: 4.86669397354
delete all files: 6.0825278759
real 0m32.600s -> +8.91% faster than the KINGSTON
user 0m3.486s
sys 0m19.373s
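the “x% faster / slower” notes are easy to get wrong by dividing by the wrong run. a small helper that always divides by the reference run keeps them consistent. this is just a sketch; the function name is made up and the numbers in the usage note are the “real” times from the logs in this article.

```python
def compare_runtime(reference_s, candidate_s):
    """Return (speed factor, percent change in runtime) of candidate vs. reference.

    factor > 1 means the candidate finished faster than the reference,
    percent > 0 means the candidate needed more time (is slower).
    """
    factor = reference_s / candidate_s
    percent = (candidate_s - reference_s) / reference_s * 100.0
    return factor, percent
```

with the kingston dd run (10.955s) as reference, the three Samsung 870 EVO runs (11.118s, 10.446s, 10.602s) come out at about +1.49%, -4.65% and -3.22% runtime. for the small files test, NTFS (87.667s) against ext4 (27.000s) gives about +225% runtime, which is the same as “3.2x slower”.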

about gnome disk utility:

apt show gnome-disk-utility

Package: gnome-disk-utility

Version: 3.30.2-3

Priority: optional

Section: admin

Maintainer: Debian GNOME Maintainers <pkg-gnome-maintainers ÄÄÄÄÄ TTTT lists.alioth.debian DOOOOT org>

Installed-Size: 6,660 kB

Depends: udisks2 (>= 2.7.6), dconf-gsettings-backend | gsettings-backend, libatk1.0-0 (>= 1.12.4), libc6 (>= 2.10), libcairo2 (>= 1.2.4), libcanberra-gtk3-0 (>= 0.25), libdvdread4 (>= 4.1.3), libgdk-pixbuf2.0-0 (>= 2.22.0), libglib2.0-0 (>= 2.43.2), libgtk-3-0 (>= 3.21.5), liblzma5 (>= 5.1.1alpha+20120614), libnotify4 (>= 0.7.0), libpango-1.0-0 (>= 1.18.0), libpangocairo-1.0-0 (>= 1.14.0), libpwquality1 (>= 1.1.0), libsecret-1-0 (>= 0.7), libsystemd0 (>= 209), libudisks2-0 (>= 2.7.6)

Breaks: gnome-settings-daemon (<< 3.24)

Homepage: https://wiki.gnome.org/Apps/Disks

Tag: admin::filesystem, hardware::storage, hardware::storage:cd,

hardware::storage:dvd, implemented-in::c, interface::graphical,

interface::x11, role::program, uitoolkit::gtk

Download-Size: 967 kB

APT-Manual-Installed: yes

APT-Sources: https://ftp.halifax.rwth-aachen.de/debian buster/main amd64 Packages

Description: manage and configure disk drives and media

GNOME Disks is a tool to manage disk drives and media:

.

* Format and partition drives.

* Mount and unmount partitions.

* Query S.M.A.R.T. attributes.

.

It utilizes udisks2

apt show udisks2

Package: udisks2

Version: 2.8.1-4

Priority: optional

Section: admin

Maintainer: Utopia Maintenance Team <pkg-utopia-maintainers ÄÄ TTT lists.alioth.debian DOOOTT org>

Installed-Size: 2,418 kB

Depends: dbus, libblockdev-part2, libblockdev-swap2, libblockdev-loop2, libblockdev-fs2, libpam-systemd, parted, udev, libacl1 (>= 2.2.23), libatasmart4 (>= 0.13), libblockdev-utils2 (>= 2.20), libblockdev2 (>= 2.20), libc6 (>= 2.7), libglib2.0-0 (>= 2.50), libgudev-1.0-0 (>= 165), libmount1 (>= 2.30), libpolkit-agent-1-0 (>= 0.99), libpolkit-gobject-1-0 (>= 0.101), libsystemd0 (>= 209), libudisks2-0 (>= 2.8.1)

Recommends: exfat-utils, dosfstools, e2fsprogs, eject, libblockdev-crypto2, ntfs-3g, policykit-1

Suggests: btrfs-progs, f2fs-tools, mdadm, libblockdev-mdraid2, nilfs-tools, reiserfsprogs, xfsprogs, udftools, udisks2-bcache, udisks2-btrfs, udisks2-lvm2, udisks2-vdo, udisks2-zram

Homepage: https://www.freedesktop.org/wiki/Software/udisks

Tag: admin::filesystem, admin::hardware, admin::monitoring,

hardware::detection, hardware::storage, hardware::storage:cd,

hardware::storage:dvd, hardware::usb, implemented-in::c,

interface::daemon, role::program

Download-Size: 369 kB

APT-Manual-Installed: no

APT-Sources: https://ftp.halifax.rwth-aachen.de/debian buster/main amd64 Packages

Description: D-Bus service to access and manipulate storage devices

The udisks daemon serves as an interface to system block devices,

implemented via D-Bus. It handles operations such as querying, mounting,

unmounting, formatting, or detaching storage devices such as hard disks

or USB thumb drives.

This package also provides the udisksctl utility, which can be used to

trigger these operations from the command line (if permitted by

PolicyKit).

Creating or modifying file systems such as XFS, RAID, or LUKS encryption

requires that the corresponding mkfs.* and admin tools are installed, such as

dosfstools for VFAT, xfsprogs for XFS, or cryptsetup for LUKS.

- udisksctl — The udisks command line tool

- udisksd — The udisks system daemon

- udisks — Disk Manager

- umount.udisks2 — unmount file systems that have been mounted by UDisks2

- udisks2.conf — The udisks2 configuration file

- udisks2_lsm.conf — The UDisks2 LSM Module configuration file

- Configurable mount options

- src: http://storaged.org/doc/udisks2-api/latest/

comment:

the USB 3.0 connected Toshiba external harddisk (2.5") performs pretty well, reaching 93.9 MB/s during read; of course it can not compete with internal SATA connected SSDs, which reach more than 300 MB/s during both write and read.

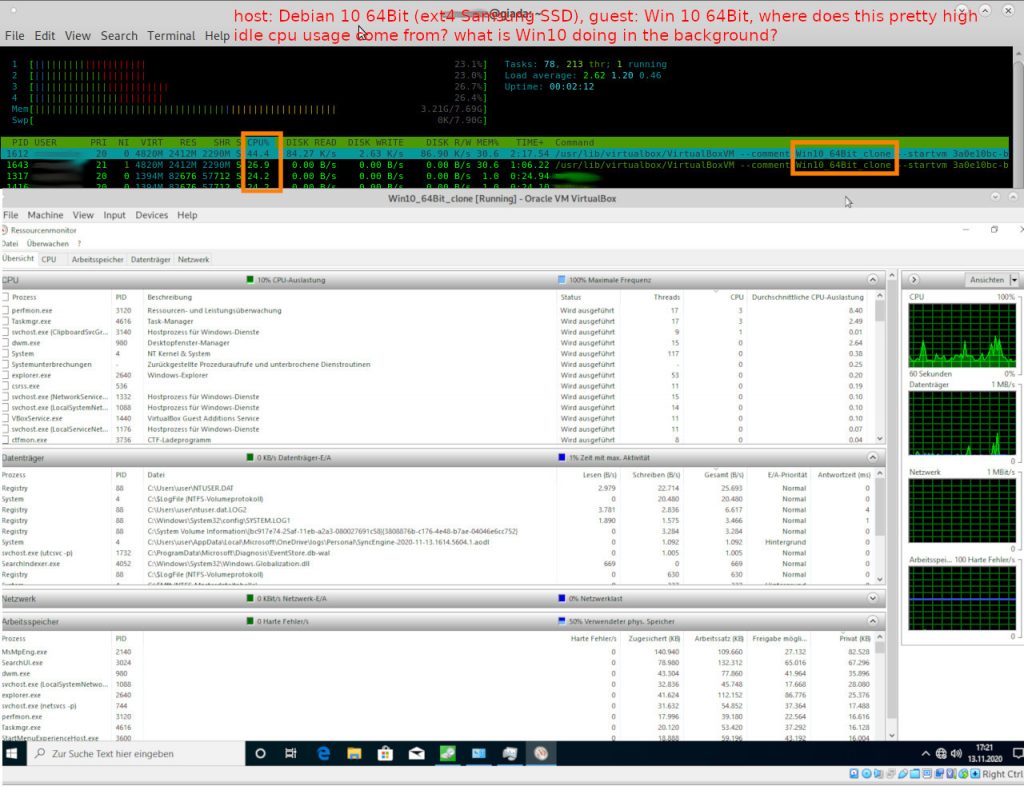

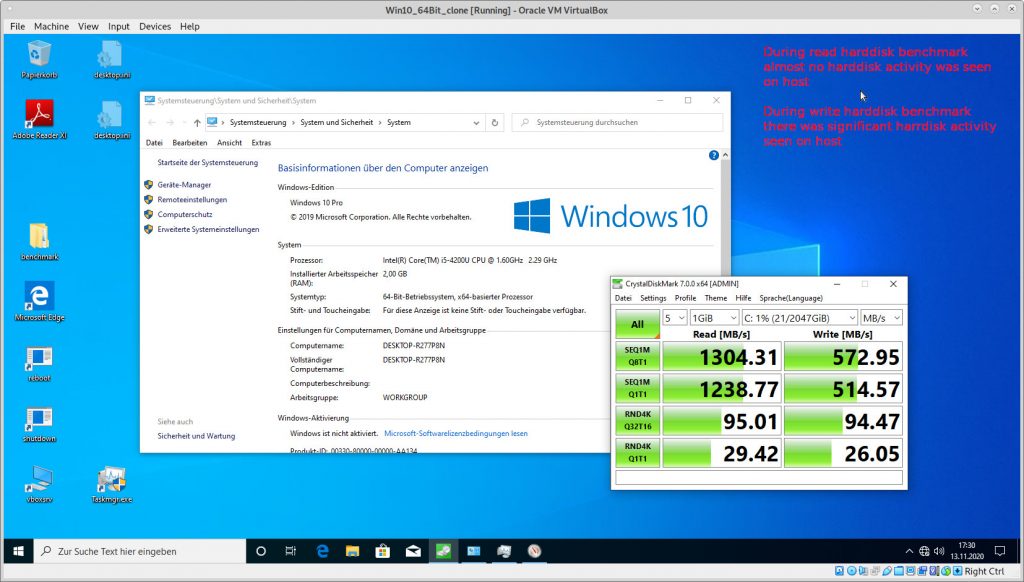

how fast are virtual harddisks?

boot up time for the virtual Win 10 64 guest was 40 sec (from power on to desktop), not pretty but okay. (too long imho)

one would expect that the speed of a virtual harddisk can not be greater than that of the real harddisk it is stored on.

but: there is a lot of RAM caching going on, so the measured virtual harddisk speeds even exceed the real harddisk speeds.

CrystalDisk Harddisk Benchmark – Win 10 64 Bit as VirtualBox guest on Debian 10 64Bit host (samsung ssd) – i-o host caching active (!)

during read, almost all read access was RAM cached (not much activity on the harddisk LED)

during write, significant harddisk activity was seen.

also notable: Win 10 VirtualBox guest produces (imho too much) CPU usage, when idle

the cause of this is unknown (is it MINING BITCOINS in the background that then get transferred to Micro$oft? :-p)

this could cause an issue when having many Win 10 vms running in parallel, because it could result in an overall slowdown of the host system
