once upon a time, a massive amount of binary data had to be compressed.

    hostnamectl; # tested on
    Operating System: Debian GNU/Linux 12 (bookworm)
    Kernel: Linux 6.1.0-12-amd64
    Architecture: x86-64

    lscpu | grep -E 'Architecture|Model name|Thread|Core'; # tested on CPU
    Architecture: x86_64
    Model name: AMD Ryzen 5 5600G with Radeon Graphics
    Thread(s) per core: 2
    Core(s) per socket: 6

    # the challenge: compress a large binary file
    # as much as possible

    # show filesize in megabytes (default is kilobytes)
    du --block-size=1M very.big.binary.file.img
    29341 very.big.binary.file.img (28.65 GBytes)
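to double check the size conversion, here is a minimal sketch that turns a byte count into MiB and GiB. The helper name `size_units` is made up for this example; the byte count is the "Input Size" that pbzip2 prints for the same file further down. Note that `du` reports disk usage rounded up to whole blocks, which probably explains why it shows one MiB more than the plain byte count gives.

```shell
# size_units BYTES -> print the size in MiB and GiB (hypothetical helper)
size_units() {
    awk -v b="$1" 'BEGIN { printf "%.2f MiB = %.2f GiB\n", b / 1048576, b / 1073741824 }'
}

# 30765219840 is the "Input Size" that pbzip2 reports for the test file
size_units 30765219840

# for any other file (GNU stat, as shipped with Debian):
# size_units "$(stat -c %s some.file)"
```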

xz:

    # how to compress a directory with all its files
    tar cJvf dir.tar.xz dir

    # for some odd reason, xz was not able to automatically use all threads of all cores with -T0
    # so manually check how many threads-cpus are there with htop and specify
    # (here -vT12 = run with 12 threads for maximum speed)
    # -k keep the original file (create a new file with the extension .img.xz)
    # -9ve - compression level 9 (max) with extreme parameter
    time xz -vT12 -k -9ve very.big.binary.file.img

    # sample output
    xz: Filter chain: --lzma2=dict=64MiB,lc=3,lp=0,pb=2,mode=normal,nice=273,mf=bt4,depth=512
    xz: Using up to 12 threads.
    xz: 14,990 MiB of memory is required. The limiter is disabled.
    xz: Decompression will need 65 MiB of memory.
    very.big.binary.file.img (1/1)
    100 % 12.6 GiB / 28.7 GiB = 0.440 31 MiB/s 15:48

    real 15m49.359s <- time it took

    du --block-size=1M very.big.binary.file.img.xz
    12907 very.big.binary.file.img.xz

    # equals a compression ratio
    bc <<< "scale=5;12907/(29341/100)"
    43.98963%

    # test integrity of compressed file
    time xz -vT12 -tv very.big.binary.file.img.xz
    real 0m16.757s; # took 16.75 seconds

    # to decompress-uncompress
    time unxz -vT12 very.big.binary.file.img.xz
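instead of reading the thread count off htop, it can also be queried on the command line. A minimal sketch, assuming GNU coreutils `nproc` is available (it is on Debian); the `threads` variable is just a name picked for this example, and the xz command is only printed here (dry run), not executed.

```shell
# number of processing units available to this process (12 on the test machine)
threads=$(nproc)
echo "threads: $threads"

# dry run: print the xz command with an explicit thread count instead of -T0
echo xz -v -T"$threads" -k -9e very.big.binary.file.img
```

drop the `echo` in front of `xz` to actually start the compression.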

from the xz manpage

-e, --extreme

Use a slower variant of the selected compression preset level (-0 … -9) to hopefully get a little bit better compression ratio, but with bad luck this can also make it worse. Decompressor memory usage is not affected, but compressor memory usage increases a little at preset levels -0 … -3.

Since there are two presets with dictionary sizes 4 MiB and 8 MiB, the presets -3e and -5e use slightly faster settings (lower CompCPU) than -4e and -6e, respectively. That way no two presets are identical.

    Preset   DictSize   CompCPU   CompMem   DecMem
    -0e      256 KiB       8        4 MiB    1 MiB
    -1e        1 MiB       8       13 MiB    2 MiB
    -2e        2 MiB       8       25 MiB    3 MiB
    -3e        4 MiB       7       48 MiB    5 MiB
    -4e        4 MiB       8       48 MiB    5 MiB
    -5e        8 MiB       7       94 MiB    9 MiB
    -6e        8 MiB       8       94 MiB    9 MiB
    -7e       16 MiB       8      186 MiB   17 MiB
    -8e       32 MiB       8      370 MiB   33 MiB
    -9e       64 MiB       8      674 MiB   65 MiB

For example, there are a total of four presets that use 8 MiB dictionary, whose order from the fastest to the slowest is -5, -6, -5e, and -6e.

pbzip2:

is the parallel-capable version of bzip2

manpage: bzip2.man.txt, pbzip2.man.txt

also interesting: while students managed to parallelize bzip2

    su - root
    apt install pbzip2
    Ctrl+D # log off root

    # -k keep original file
    # -v verbose output
    # -9 max compression level
    time pbzip2 -k -v -9 very.big.binary.file.img

sample output:

    Parallel BZIP2 v1.1.13 [Dec 18, 2015]
    By: Jeff Gilchrist [http://compression.ca]
    Major contributions: Yavor Nikolov [http://javornikolov.wordpress.com]
    Uses libbzip2 by Julian Seward

             # CPUs: 12
     BWT Block Size: 900 KB
    File Block Size: 900 KB
     Maximum Memory: 100 MB
    -------------------------------------------
             File #: 1 of 1
         Input Name: very.big.binary.file.img
        Output Name: very.big.binary.file.img.bz2

         Input Size: 30765219840 bytes
    Compressing data...
        Output Size: 13706448532 bytes
    -------------------------------------------

         Wall Clock: 268.551870 seconds

    real 4m28.555s

    du --block-size=1M very.big.binary.file.img.bz2
    13072 very.big.binary.file.img.bz2

    bc <<< "scale=5;13072/(29341/100)"
    44.55199%

7zip:

manpage: 7z.man.txt

    # multithreading is on by default
    time 7z a -mx=9 -mmt=on very.big.binary.file.img.7z very.big.binary.file.img
    real 16m42.875s

    # so the compression ratio is
    bc <<< "scale=5;12903/(29341/100)"
    43.97600%

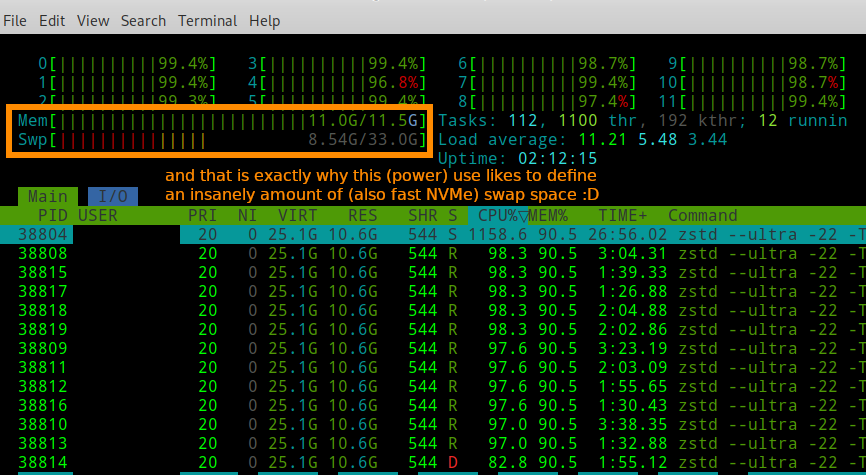

zstd:

manpage: zstd.man.txt

    # --ultra -22 max compression level
    # -T12 use 12 threads
    # -k keep original file
    time zstd --ultra -22 -T12 -k very.big.binary.file.img
    real 19m47.879s

    # show filesize
    du --block-size=1M very.big.binary.file.img
    29341 very.big.binary.file.img
    du --block-size=1M very.big.binary.file.img.zst
    12784 very.big.binary.file.img.zst

    # so the compression ratio is
    bc <<< "scale=5;12784/(29341/100)"
    43.57043%

    # decompress
    unzstd very.big.binary.file.img.zst
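since the original file is kept with -k, a round trip can be checked without writing the decompressed data to disk: decompress to stdout and compare the stream against the original. A minimal sketch; `verify_roundtrip` is a made-up helper name, and it assumes the decompressor accepts `-dc` (decompress to stdout), which xz, zstd and pbzip2 do.

```shell
# verify_roundtrip ORIGINAL ARCHIVE DECOMPRESSOR [ARGS...]  (hypothetical helper)
# runs "DECOMPRESSOR ARGS ARCHIVE" and compares its stdout byte by byte
# with ORIGINAL; returns 0 only if both are identical
verify_roundtrip() {
    original=$1
    archive=$2
    shift 2
    "$@" "$archive" | cmp -s - "$original"
}

# only runs if the files from this article exist in the current directory
if [ -f very.big.binary.file.img ] && [ -f very.big.binary.file.img.zst ]; then
    verify_roundtrip very.big.binary.file.img very.big.binary.file.img.zst zstd -dc \
        && echo "zstd round trip OK"
fi
```

the same call works with `xz -dc` for the .xz file and `pbzip2 -dc` for the .bz2 file.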

results:

so all algorithms struggled to compress the (mostly binary data) file and only managed to squeeze it down to ~44% of its original size

while zstd won in terms of “maximum compression”, it was super impressive how fast pbzip2 accomplished the compression: 4m28s, versus 15m49s (xz), 16m42s (7z) and 19m47s (zstd) 😀
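to put the speed difference into numbers, the `real` times measured above can be turned into throughput (MiB of input per second). A rough sketch; the helper names `to_seconds` and `throughput` are made up for this example, and 29341 is the original size in MiB as reported by du.

```shell
# to_seconds 15m49.359s -> 949.359 (parses the "real" format printed by time)
to_seconds() {
    echo "$1" | awk -F'[ms]' '{ printf "%.3f", $1 * 60 + $2 }'
}

# throughput SIZE_MIB REAL_TIME -> MiB per second, one decimal
throughput() {
    awk -v size="$1" -v secs="$(to_seconds "$2")" 'BEGIN { printf "%.1f", size / secs }'
}

# tool:real-time pairs taken from the measurements in this article
for entry in pbzip2:4m28.555s xz:15m49.359s 7z:16m42.875s zstd:19m47.879s; do
    echo "${entry%%:*}: $(throughput 29341 "${entry##*:}") MiB/s"
done
```

keep in mind that every tool ran at its maximum compression setting, so this says nothing about how they compare at faster levels.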

“maximum compression” toplist:

- 12784 MBytes (zstd)

- 12903 MBytes (7z)

- 12907 MBytes (xz)

- 13072 MBytes (pbzip2) (compressed file 2.25% larger than zstd)
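the toplist can be recomputed in one go instead of calling bc once per tool. A small sketch using awk; the helper name `percent` is made up for this example, the sizes are the du values (in 1M blocks) from above, and zstd is used as the baseline because it produced the smallest file.

```shell
original=29341   # size of very.big.binary.file.img as reported by du
best=12784       # zstd, the smallest result

# percent PART BASE -> PART as a percentage of BASE, two decimals
percent() {
    awk -v p="$1" -v b="$2" 'BEGIN { printf "%.2f", p * 100 / b }'
}

for entry in zstd:12784 7z:12903 xz:12907 pbzip2:13072; do
    name=${entry%%:*}
    size=${entry##*:}
    ratio=$(percent "$size" "$original")            # compressed size vs original
    extra=$(percent "$((size - best))" "$best")     # how much larger than zstd
    echo "$name: $size = $ratio% of original, +$extra% vs zstd"
done
```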

related links:

https://www.rootusers.com/13-simple-xz-examples/

https://linuxnightly.com/what-is-the-best-compression-tool-in-linux/

https://linuxreviews.org/Comparison_of_Compression_Algorithms
