UPDATE: 2022

may have to apologize to HighPoint and ALTERNATE, as other RAID systems, such as AMD RAIDXpert2-based systems (same concept), are also STALLING during the ATTO Disk Benchmark in Direct I/O mode.

So the ATTO Benchmark MIGHT be the problem here 😀

HighPoint still has a long way to go to catch up with the “well known” RAID card brands such as LSI and ADAPTEC, but at least they try.

If admins want to upgrade old servers to NVMe RAID (RAID10 preferably), check out the WAY more expensive ADAPTEC SmartRaid 3200 family, which will soon be available for purchase (untested! see specs here https://storage.microsemi.com/en-us/support/smartraid3200/)

- 3200 is the new Trimode family supporting SAS, SATA and NVMe devices

if RAID1 is sufficient and replacing mobos is an option, then:

- get a mobo that has at least 2x NVMe ports on-board

- some vendors' BIOSes can do RAID0 and RAID1

- otherwise use GNU Linux software RAID (mdadm) for reliable RAID and (probably) similar performance

- e.g. from Supermicro (very solid servers)

- a Supermicro X11DPH-T was used in this shaky video here

- ASUS video on how to build a bootable NVMe RAID on an ASUS mobo

- or other trusted mobo vendors such as GIGABYTE

back to the new LowPoint of HighPoint: some cards are reported to have died a heat death, and HighPoint reportedly gave poor RMA support.

for those users who could not be scared away… it actually works with MDADM software RAID under GNU Linux Debian 10, but at half the heat and half the speed.

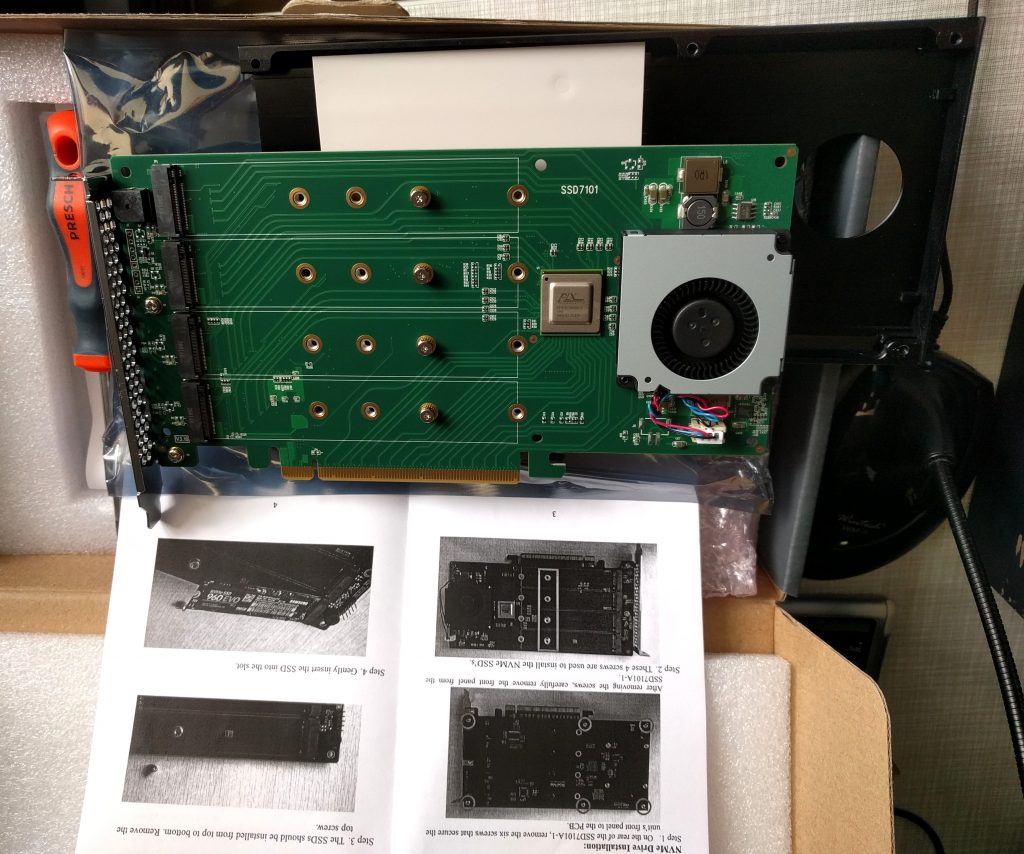

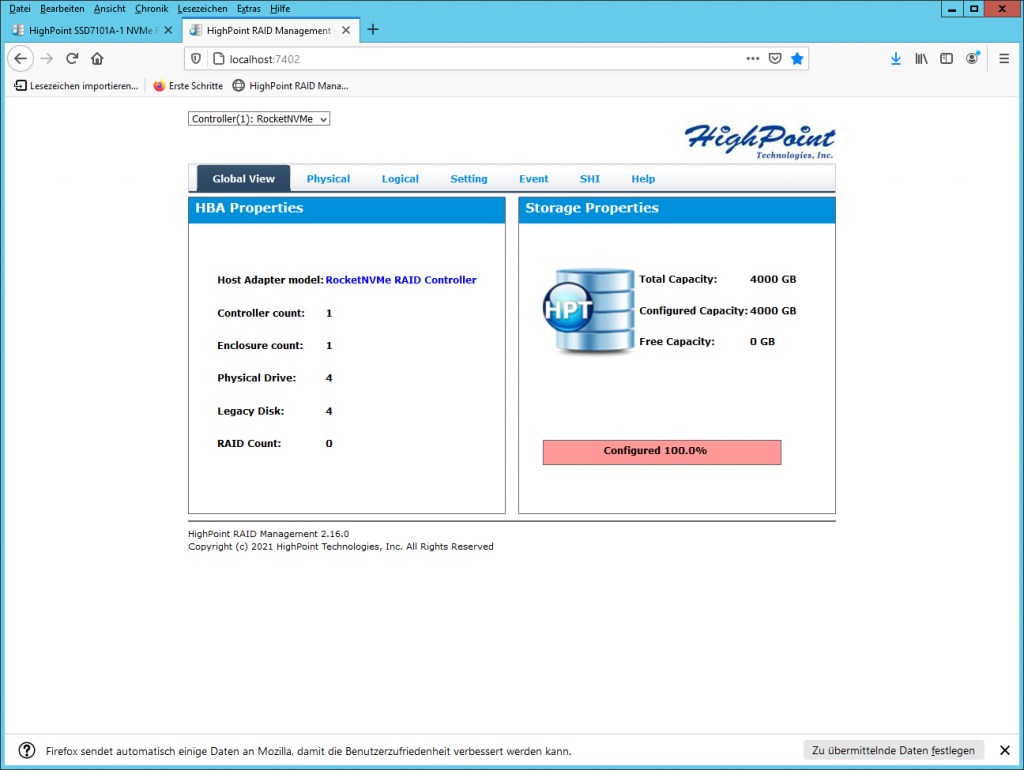

https://highpoint-tech.com/USA_new/series-ssd7101a-1-overview.htm

this card + x16 PCIe slot + NVMes promise MASSIVE harddisk speeds 🙂

- for Mac/OSX users this card seems to be a good pick

- for GNU Linux users, in its current state (faulty drivers that do not compile), it is just an expensive NVMe riser card… it works with mdadm software RAID but does not deliver the promised speeds

- for Windows (Server 2012 R2) users, in its current state (faulty drivers), it cannot be recommended (see screenshots at the very bottom)

possible alternatives?

- nothing yet from the renowned Adaptec / Microsemi: “Microsemi RAID adapters and controllers (ROCs) supporting NVMe architecture will sample soon.” (src)

maybe just get a simple NVMe PCIe card and go with the built-in software RAID of GNU Linux (mdadm) or Windows.

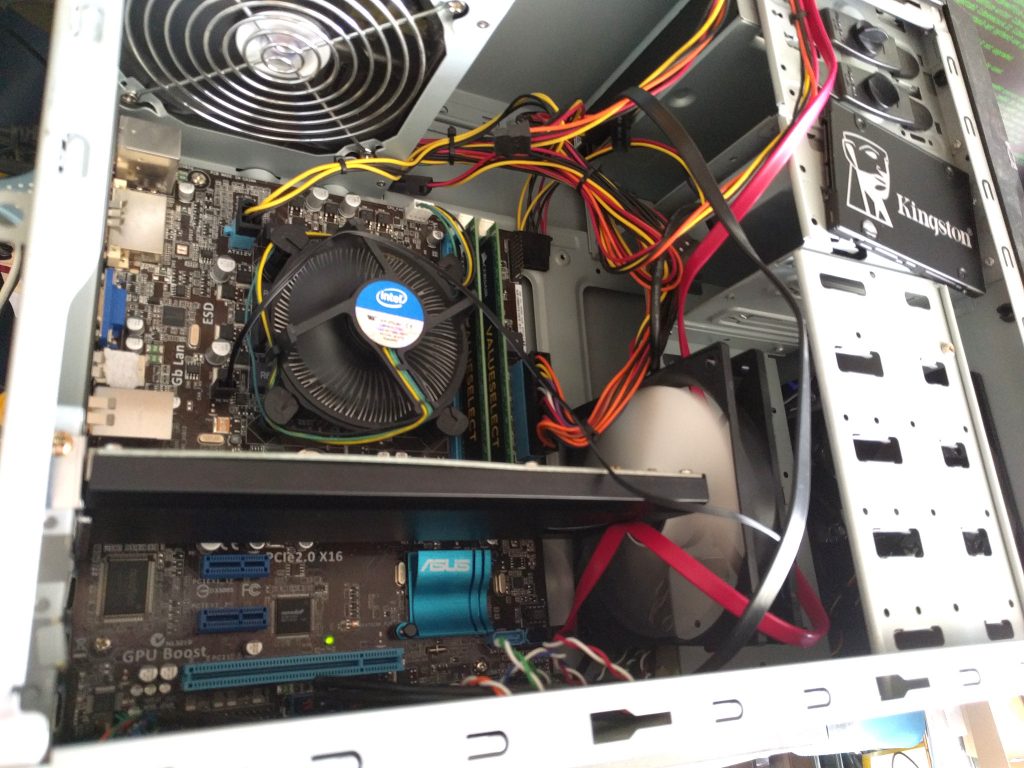

TestPC was Asus P8H61M_LEUSB3 (https://origin-www.asus.com/Motherboards/P8H61M_LEUSB3/overview/)

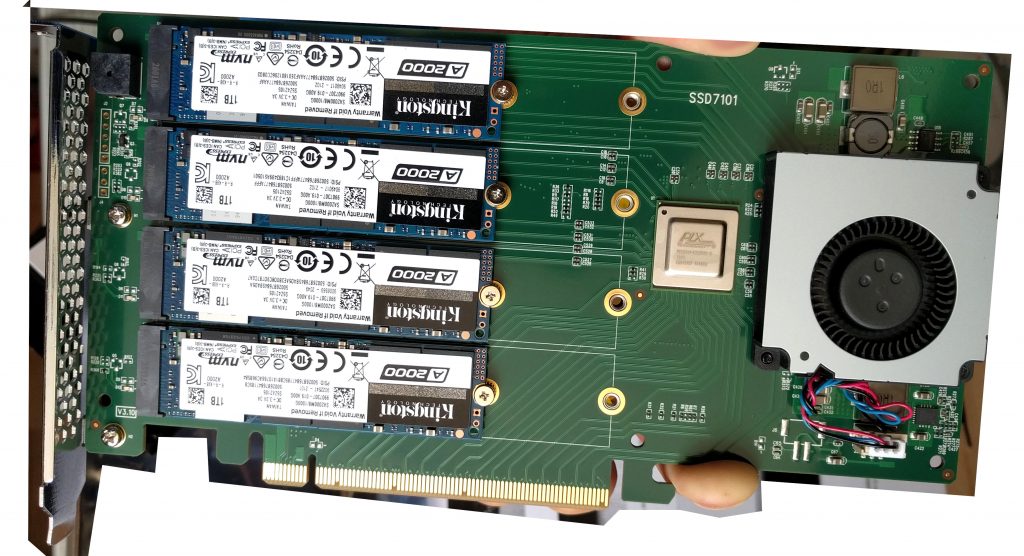

what is really nice are the blue disk activity LEDs of the 1TB Kingston NVMes (KINGSTON SA2000M81000G) 🙂

and yes…

- each Kingston NVMe has:

  - 2 GByte/s (sequential read)

  - 1 GByte/s (sequential write)

- mdadm (GNU Linux software) RAID10: … see below…

- RAID10 with the HighPoint drivers: … the drivers could not be compiled (yet)
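The per-drive figures above give a rough ceiling for four of these drives in RAID10. This is a minimal sketch under three assumptions: ideal striping, no CPU or PCIe limits, and mdadm's default of two copies per block. Under those assumptions, reads could in theory be served by all four drives at once, while every write has to land on two of them. The function name `raid10_bounds` is hypothetical. Real arrays usually stay well below these bounds.

```python
# Idealized RAID10 throughput bounds from single-drive figures.
# Assumptions (not measurements): perfect striping, no CPU or bus limits,
# every block stored `copies` times across n_drives.

def raid10_bounds(n_drives: int, read_gbs: float, write_gbs: float, copies: int = 2):
    """Return (max_read, max_write) in GByte/s for an ideal RAID10 array."""
    if n_drives % copies != 0:
        raise ValueError("n_drives must be a multiple of copies")
    # Reads can, in theory, be served by every drive in parallel.
    max_read = n_drives * read_gbs
    # Each write goes to `copies` drives, so only n_drives / copies
    # drives' worth of unique data is written in parallel.
    max_write = (n_drives // copies) * write_gbs
    return max_read, max_write

if __name__ == "__main__":
    # Kingston SA2000M81000G figures quoted in the text: 2 GByte/s read, 1 GByte/s write.
    r, w = raid10_bounds(4, read_gbs=2.0, write_gbs=1.0)
    print(f"ideal RAID10 read <= {r} GByte/s, write <= {w} GByte/s")
```

The author's expectation of "roughly double" a single drive sits between these idealized read and write ceilings.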

but it is probably better anyway to let GNU Linux MDADM handle the RAID 🙂

WARNING! for whatever reason the USB keyboard AND MOUSE stopped working after installing this card… X-D

luckily this somewhat old Asus mobo still had a PS/2 keyboard/mouse combo port. So it might be smart to assign a fixed IP to the TestPC and ssh into it BEFORE installing the card

here is the complete dmesg.txt of that problem

hint!

some of those benchmarks are CPU-heavy… so with a faster CPU (it is only an i3) it might be possible to achieve even faster speeds in mdadm RAID10

tested on:

```
hostnamectl; # latest GNU Linux Debian 10
Operating System: Debian GNU/Linux 10 (buster)
Kernel: Linux 4.19.0-16-amd64
Architecture: x86-64

lscpu
Architecture: x86_64
CPU op-mode(s): 32-bit, 64-bit
Byte Order: Little Endian
Address sizes: 36 bits physical, 48 bits virtual
CPU(s): 4
On-line CPU(s) list: 0-3
Thread(s) per core: 2
Core(s) per socket: 2
Socket(s): 1
NUMA node(s): 1
Vendor ID: GenuineIntel
CPU family: 6
Model: 42
Model name: Intel(R) Core(TM) i3-2120 CPU @ 3.30GHz
Stepping: 7
CPU MHz: 3291.877
CPU max MHz: 3300.0000
CPU min MHz: 1600.0000
BogoMIPS: 6583.57
Virtualization: VT-x
L1d cache: 32K
L1i cache: 32K
L2 cache: 256K
L3 cache: 3072K
NUMA node0 CPU(s): 0-3
Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx est tm2 ssse3 cx16 xtpr pdcm pcid sse4_1 sse4_2 popcnt tsc_deadline_timer xsave avx lahf_lm epb pti tpr_shadow vnmi flexpriority ept vpid xsaveopt dtherm arat pln pts

cat /etc/debian_version
10.9

# even without the vendor driver GNU Linux manages to access the NVMes
lsblk -fs
NAME FSTYPE LABEL UUID FSAVAIL FSUSE% MOUNTPOINT
nvme0n1
nvme1n1
nvme2n1
nvme3n1
```

info about the card: HighPoint SSD7101A-1

about PLX Technology, the maker of the PEX 8747 switch chip on this card: “On August 12, 2014, Broadcom Inc. acquired the company.[21]” (src: Wiki)

```
lspci; # the PEX 8747 is from BroadCom
# product overview: PEX8747_Product_Brief_v1-0_20Oct10.pdf
01:00.0 PCI bridge: PLX Technology, Inc. PEX 8747 48-Lane, 5-Port PCI Express Gen 3 (8.0 GT/s) Switch (rev ca)
02:08.0 PCI bridge: PLX Technology, Inc. PEX 8747 48-Lane, 5-Port PCI Express Gen 3 (8.0 GT/s) Switch (rev ca)
02:09.0 PCI bridge: PLX Technology, Inc. PEX 8747 48-Lane, 5-Port PCI Express Gen 3 (8.0 GT/s) Switch (rev ca)
02:10.0 PCI bridge: PLX Technology, Inc. PEX 8747 48-Lane, 5-Port PCI Express Gen 3 (8.0 GT/s) Switch (rev ca)
02:11.0 PCI bridge: PLX Technology, Inc. PEX 8747 48-Lane, 5-Port PCI Express Gen 3 (8.0 GT/s) Switch (rev ca)
03:00.0 Non-Volatile memory controller: Kingston Technologies Device 2263 (rev 03)
04:00.0 Non-Volatile memory controller: Kingston Technologies Device 2263 (rev 03)
05:00.0 Non-Volatile memory controller: Kingston Technologies Device 2263 (rev 03)
06:00.0 Non-Volatile memory controller: Kingston Technologies Device 2263 (rev 03)

lspci -vvv
01:00.0 PCI bridge: PLX Technology, Inc. PEX 8747 48-Lane, 5-Port PCI Express Gen 3 (8.0 GT/s) Switch (rev ca) (prog-if 00 [Normal decode])
    Control: I/O+ Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx-
    Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- SERR- TAbort- Reset- FastB2B- PriDiscTmr- SecDiscTmr- DiscTmrStat- DiscTmrSERREn-
    Capabilities: [40] Power Management version 3
        Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0+,D1-,D2-,D3hot+,D3cold+)
        Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-
    Capabilities: [48] MSI: Enable- Count=1/8 Maskable+ 64bit+
        Address: 0000000000000000 Data: 0000
        Masking: 00000000 Pending: 00000000
    Capabilities: [68] Express (v2) Upstream Port, MSI 00
        DevCap: MaxPayload 2048 bytes, PhantFunc 0
            ExtTag- AttnBtn- AttnInd- PwrInd- RBE+ SlotPowerLimit 75.000W
        DevCtl: Report errors: Correctable- Non-Fatal- Fatal- Unsupported-
            RlxdOrd- ExtTag- PhantFunc- AuxPwr- NoSnoop+
            MaxPayload 128 bytes, MaxReadReq 128 bytes
        DevSta: CorrErr+ UncorrErr- FatalErr- UnsuppReq+ AuxPwr- TransPend-
        LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L1, Exit Latency L0s <2us, L1 <4us
            ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+
        LnkCtl: ASPM Disabled; Disabled- CommClk-
            ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
        LnkSta: Speed 5GT/s, Width x16, TrErr- Train- SlotClk- DLActive- BWMgmt- ABWMgmt-
        DevCap2: Completion Timeout: Not Supported, TimeoutDis-, LTR+, OBFF Via message
        DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis-, LTR-, OBFF Disabled
        LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-
            Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
            Compliance De-emphasis: -6dB
        LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete-, EqualizationPhase1-
            EqualizationPhase2-, EqualizationPhase3-, LinkEqualizationRequest-
    Capabilities: [a4] Subsystem: PLX Technology, Inc. PEX 8747 48-Lane, 5-Port PCI Express Gen 3 (8.0 GT/s) Switch
    Capabilities: [100 v1] Device Serial Number ca-87-00-10-b5-df-0e-00
    Capabilities: [fb4 v1] Advanced Error Reporting
        UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
        UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
        UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-
        CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr+
        CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr+
        AERCap: First Error Pointer: 1f, GenCap+ CGenEn- ChkCap+ ChkEn-
    Capabilities: [138 v1] Power Budgeting <?>
    Capabilities: [10c v1] #19
    Capabilities: [148 v1] Virtual Channel
        Caps: LPEVC=0 RefClk=100ns PATEntryBits=8
        Arb: Fixed- WRR32- WRR64- WRR128-
        Ctrl: ArbSelect=Fixed
        Status: InProgress-
        VC0: Caps: PATOffset=03 MaxTimeSlots=1 RejSnoopTrans-
            Arb: Fixed- WRR32- WRR64+ WRR128- TWRR128- WRR256-
            Ctrl: Enable+ ID=0 ArbSelect=WRR64 TC/VC=01
            Status: NegoPending- InProgress-
            Port Arbitration Table <?>
    Capabilities: [e00 v1] #12
    Capabilities: [b00 v1] Latency Tolerance Reporting
        Max snoop latency: 0ns
        Max no snoop latency: 0ns
    Capabilities: [b70 v1] Vendor Specific Information: ID=0001 Rev=0 Len=010 <?>
    Kernel driver in use: pcieport
...

# and the NVMe
03:00.0 Non-Volatile memory controller: Kingston Technologies Device 2263 (rev 03) (prog-if 02 [NVM Express])
    Subsystem: Kingston Technologies Device 2263
    Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+
    Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- SERR-

dmesg|grep 01:00.0
[ 0.296868] pci 0000:01:00.0: [10b5:8747] type 01 class 0x060400
[ 0.296884] pci 0000:01:00.0: reg 0x10: [mem 0xf7c00000-0xf7c3ffff]
[ 0.296942] pci 0000:01:00.0: PME# supported from D0 D3hot D3cold
[ 0.296965] pci 0000:01:00.0: 64.000 Gb/s available PCIe bandwidth, limited by 5 GT/s x16 link at 0000:00:01.0 (capable of 126.016 Gb/s with 8 GT/s x16 link)
[ 0.307565] pci 0000:01:00.0: PCI bridge to [bus 02-06]
[ 0.307571] pci 0000:01:00.0: bridge window [mem 0xf7800000-0xf7bfffff]
[ 0.388724] pci 0000:01:00.0: bridge window [io 0x1000-0x0fff] to [bus 02-06] add_size 1000
[ 0.388725] pci 0000:01:00.0: bridge window [mem 0x00100000-0x001fffff 64bit pref] to [bus 02-06] add_size 200000 add_align 100000
[ 0.388759] pci 0000:01:00.0: BAR 15: assigned [mem 0xdfb00000-0xdfdfffff 64bit pref]
[ 0.388762] pci 0000:01:00.0: BAR 13: assigned [io 0x2000-0x2fff]
[ 0.388814] pci 0000:01:00.0: PCI bridge to [bus 02-06]
[ 0.388816] pci 0000:01:00.0: bridge window [io 0x2000-0x2fff]
[ 0.388820] pci 0000:01:00.0: bridge window [mem 0xf7800000-0xf7bfffff]
[ 0.388823] pci 0000:01:00.0: bridge window [mem 0xdfb00000-0xdfdfffff 64bit pref]
```

info about the 1TB Kingston NVMe (SA2000M81000G)

```
smartctl -H /dev/nvme0n1
smartctl 6.6 2017-11-05 r4594 [x86_64-linux-4.19.0-16-amd64] (local build)
Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

smartctl -i /dev/nvme0n1
smartctl 6.6 2017-11-05 r4594 [x86_64-linux-4.19.0-16-amd64] (local build)
Copyright (C) 2002-17, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number: KINGSTON SA2000M81000G
Serial Number: X
Firmware Version: S5Z42105
PCI Vendor/Subsystem ID: 0x2646
IEEE OUI Identifier: 0x0026b7
Controller ID: 1
Number of Namespaces: 1
Namespace 1 Size/Capacity: 1,000,204,886,016 [1.00 TB]
Namespace 1 Utilization: 13,348,388,864 [13.3 GB]
Namespace 1 Formatted LBA Size: 512
Namespace 1 IEEE EUI-64: 0026b7 6847185c85
Local Time is: Wed May 19 23:58:32 2021 CEST
```
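smartctl reports the namespace size in decimal bytes, while lsblk (931.5G) and df (1.8T) print binary units. A small sketch of that conversion, plus the ideal usable space of four drives in RAID10 with two copies per block. The helper names are hypothetical. The md0 size from /proc/mdstat (counted in 1 KiB blocks) comes out slightly smaller than the ideal figure, presumably because md reserves some room for its own metadata. The sketch ignores that.

```python
# Convert byte counts from smartctl and /proc/mdstat into the binary units
# (GiB/TiB) that lsblk and df print.
GIB = 1024 ** 3
TIB = 1024 ** 4

def bytes_to_gib(n_bytes: int) -> float:
    return n_bytes / GIB

def raid10_usable_bytes(drive_bytes: int, n_drives: int, copies: int = 2) -> int:
    # Ideal RAID10: every block is stored `copies` times, so usable space is
    # the total raw space divided by the number of copies (md metadata ignored).
    return drive_bytes * n_drives // copies

if __name__ == "__main__":
    drive = 1_000_204_886_016                  # from smartctl -i
    print(f"one drive: {bytes_to_gib(drive):.1f} GiB")
    usable = raid10_usable_bytes(drive, 4)
    print(f"RAID10 x4 (ideal): {usable / TIB:.2f} TiB")
    md_blocks_kib = 1_953_260_544              # from /proc/mdstat, 1 KiB blocks
    print(f"md0 as created: {md_blocks_kib * 1024 / TIB:.2f} TiB")
```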

benchmark 1TB Kingston NVMe (SA2000M81000G): sequential

setup: ext4 was created on one of the 1TB Kingston NVMes, and only this single device was benchmarked

download the script here: bench_harddisk.sh.zip

```
time /scripts/bench/bench_harddisk.sh
=== harddisk sequential write and read bench v1 ===
starting test on the device that holds the current directory the user is in
no need to run it as root
========== writing 3GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 2.33577 s, 919 MB/s

real 0m2.342s
user 0m0.001s
sys 0m1.876s
========== reading 6GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 1.18685 s, 1.8 GB/s

real 0m1.193s
user 0m0.000s
sys 0m0.799s
========== tidy up remove testfile ==========

real 0m3.656s
user 0m0.004s
sys 0m2.792s
```
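The headings say 3GB and 6GB, yet each dd run reports exactly 2147479552 bytes with "0+1 records". That number is 0x7ffff000, the most Linux transfers in a single read()/write() call. So the script probably runs dd with one oversized block. This is an assumption, since the script itself is only available as a zip. A minimal sketch that parses a dd summary line, recomputes the rate in dd's decimal MB, and flags the single-syscall cap (`parse_dd` is a hypothetical helper):

```python
import re

# Linux transfers at most 0x7ffff000 bytes (INT_MAX rounded down to a page)
# in one read()/write() call; see the NOTES section of `man 2 write`.
LINUX_MAX_RW = 0x7FFFF000  # 2147479552 bytes

# "<bytes> bytes (...) copied, <seconds> s, <rate>"
DD_SUMMARY = re.compile(r"^(\d+) bytes .*copied, ([\d.]+) s")

def parse_dd(line: str):
    """Return (bytes, seconds, MB/s) recomputed from a dd summary line."""
    m = DD_SUMMARY.search(line)
    if m is None:
        raise ValueError(f"not a dd summary line: {line!r}")
    n_bytes, seconds = int(m.group(1)), float(m.group(2))
    return n_bytes, seconds, n_bytes / seconds / 1e6  # dd's MB = 10**6 bytes

if __name__ == "__main__":
    for line in (
        "2147479552 bytes (2.1 GB, 2.0 GiB) copied, 2.33577 s, 919 MB/s",
        "2147479552 bytes (2.1 GB, 2.0 GiB) copied, 1.18685 s, 1.8 GB/s",
    ):
        n, s, rate = parse_dd(line)
        print(f"{rate:7.1f} MB/s  capped at one syscall: {n == LINUX_MAX_RW}")
```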

benchmark 1TB Kingston NVMe (SA2000M81000G): many small python generated files

setup: ext4 was created on one of the 1TB Kingston NVMes, and only this single device was benchmarked

for comparison, the Samsung SSD 860 EVO 500GB took: real 0m36.163s

download the script here: bench_harddisk_small_files.py.zip

```
time /scripts/bench/bench_harddisk_small_files.py
create test folder: 0.00545001029968
create files: 9.2529399395
rewrite files: 1.83215284348
read linear: 1.67627692223
read random: 2.59832310677
delete all files: 4.72371888161

real 0m23.995s <- roughly a third less time than the SSD 860 EVO 500GB (36.163s)
user 0m3.380s
sys 0m14.668s
```
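The original bench_harddisk_small_files.py is only offered as a download. This is a hypothetical sketch of a small-file benchmark with the same phase names. The folder name, file count and file size are assumptions, not the author's values. The caches are not dropped between phases, so the two read phases mostly measure the page cache rather than the NVMe.

```python
#!/usr/bin/env python3
# Hypothetical small-file benchmark modelled on the phase names printed by
# bench_harddisk_small_files.py; n_files and size are assumptions.
import os
import random
import shutil
import time

def bench_small_files(base_dir: str, n_files: int = 1000, size: int = 4096) -> dict:
    """Time each phase in base_dir and return {phase name: seconds}."""
    folder = os.path.join(base_dir, "bench_small_files_test")
    payload = os.urandom(size)
    paths = [os.path.join(folder, f"f{i:06d}") for i in range(n_files)]
    timings = {}

    def phase(name, fn):
        t0 = time.perf_counter()
        fn()
        timings[name] = time.perf_counter() - t0

    def write_all():
        for p in paths:
            with open(p, "wb") as f:
                f.write(payload)

    def read(order):
        for p in order:
            with open(p, "rb") as f:
                f.read()

    phase("create test folder", lambda: os.makedirs(folder))
    phase("create files", write_all)
    phase("rewrite files", write_all)
    phase("read linear", lambda: read(paths))
    phase("read random", lambda: read(random.sample(paths, len(paths))))
    phase("delete all files", lambda: shutil.rmtree(folder))
    return timings

if __name__ == "__main__":
    # Like the original, test the device that holds the current directory.
    for name, secs in bench_small_files(os.getcwd()).items():
        print(f"{name}: {secs:.4f}")
```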

bench in MDADM (GNU Linux software) RAID10

now to the mdadm RAID10 benchmarks:

```
# create the raid
mdadm --create /dev/md0 --level=10 --raid-devices=4 /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1
mkfs.ext4 -L "ext4RAID10" /dev/md0

# wait till finished
cat /proc/mdstat;
Personalities : [raid10]
md0 : active raid10 nvme3n1[3] nvme2n1[2] nvme1n1[1] nvme0n1[0]
      1953260544 blocks super 1.2 512K chunks 2 near-copies [4/4] [UUUU]
      [>....................] resync = 1.1% (23010240/1953260544) finish=163.9min speed=196278K/sec
      bitmap: 15/15 pages [60KB], 65536KB chunk

# when finished it looks like this
nvme1n1 259:0 0 931.5G 0 linux_raid_member 347f7cac-b875-ca2e-489a-06ae968510c6
└─md0 9:0 0 1.8T 0 ext4 /media/user/md0 7d2d0f03-f1f7-4852-bcef-5f0f06659ca9
nvme0n1 259:1 0 931.5G 0 linux_raid_member 347f7cac-b875-ca2e-489a-06ae968510c6
└─md0 9:0 0 1.8T 0 ext4 /media/user/md0 7d2d0f03-f1f7-4852-bcef-5f0f06659ca9
nvme3n1 259:2 0 931.5G 0 linux_raid_member 347f7cac-b875-ca2e-489a-06ae968510c6
└─md0 9:0 0 1.8T 0 ext4 /media/user/md0 7d2d0f03-f1f7-4852-bcef-5f0f06659ca9
nvme2n1 259:3 0 931.5G 0 linux_raid_member 347f7cac-b875-ca2e-489a-06ae968510c6
└─md0 9:0 0 1.8T 0 ext4 /media/user/md0 7d2d0f03-f1f7-4852-bcef-5f0f06659ca9

=========== harddisk usage
/dev/md0 1.8T 77M 1.7T 1% /media/user/md0

# big sequential files
time /scripts/bench/bench_harddisk.sh
=== harddisk sequential write and read bench v1 ===
starting test on the device that holds the current directory the user is in
no need to run it as root
========== writing 3GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 2.289 s, 938 MB/s

real 0m2.298s
user 0m0.000s
sys 0m1.949s
========== reading 6GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 0.855744 s, 2.5 GB/s

real 0m0.862s
user 0m0.008s
sys 0m0.853s
========== tidy up remove testfile ==========

real 0m3.291s <- yep, so here RAID10 took about 10% less time than the single NVMe
user 0m0.008s
sys 0m2.925s

# a lot of small files bench
time /scripts/bench/bench_harddisk_small_files.py
create test folder: 0.00363707542419
create files: 9.89691901207
rewrite files: 1.9919090271
read linear: 1.61445593834
read random: 2.6266078949
delete all files: 4.63190793991
1st run: real 0m24.858s <- the NVMes in mdadm RAID10 are 3.59658% slower than a single NVMe tested individually (no RAID)
2nd run: real 0m24.737s
3rd run: real 0m24.624s
# that's kind of unexpected
# because RAID10 SHOULD actually double the speed
# while adding redundancy
```
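Two small calculations sit behind this output. First, md's resync estimate matches "remaining 1 KiB blocks divided by current speed". Second, the "-10%" and "+3.6%" figures are relative changes in wall-clock time against the single NVMe. A minimal sketch of both (helper names are hypothetical). The regex only covers the progress format shown in this /proc/mdstat output.

```python
import re

# Progress part of a /proc/mdstat resync line, e.g.
# "(23010240/1953260544) finish=163.9min speed=196278K/sec"
PROGRESS = re.compile(r"\((\d+)/(\d+)\)\s+finish=([\d.]+)min\s+speed=(\d+)K/sec")

def resync_eta_min(mdstat_line: str) -> float:
    """Remaining 1 KiB blocks divided by speed (KiB/s), in minutes."""
    m = PROGRESS.search(mdstat_line)
    if m is None:
        raise ValueError("no resync progress found")
    done, total, _finish, speed = m.groups()
    return (int(total) - int(done)) / int(speed) / 60

def relative_change(new: float, old: float) -> float:
    """Percent change of a run time; negative means the new run took less time."""
    return (new - old) / old * 100

if __name__ == "__main__":
    line = "[>....................] resync = 1.1% (23010240/1953260544) finish=163.9min speed=196278K/sec"
    print(f"ETA {resync_eta_min(line):.1f} min")
    print(f"small files: {relative_change(24.858, 23.995):+.2f} %")  # RAID10 vs single NVMe
    print(f"sequential:  {relative_change(3.291, 3.656):+.2f} %")
```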

another test that could be performed:

create a 10 GByte test file with random data (rsync does not seem to be a good benchmark, probably no matter the compression level, because it is CPU-heavy)

```
# here the cpu is the limiting factor
time head -c 10240m /dev/zero | openssl enc -aes-128-cbc -pass pass:"$(head -c 20 /dev/urandom | base64)" > testfile

real 0m49.385s
user 0m37.556s
sys 0m18.791s
```
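Another way to get an incompressible test file is to read the bytes straight from the kernel's random source in chunks. This is a hedged Python sketch, not the author's method. `write_random_file` and the 4 MiB chunk size are assumptions, and its speed on the i3 was not measured. Like the openssl pipeline, it will likely be CPU-bound.

```python
import os

def write_random_file(path: str, size_mib: int, chunk_mib: int = 4) -> int:
    """Write size_mib MiB of os.urandom() bytes to path; return bytes written."""
    chunk = chunk_mib * 1024 * 1024
    remaining = size_mib * 1024 * 1024
    written = 0
    with open(path, "wb") as f:
        while remaining > 0:
            n = min(chunk, remaining)
            f.write(os.urandom(n))  # random, so compression cannot shrink it
            remaining -= n
            written += n
    return written

if __name__ == "__main__":
    # 10240 MiB, the same size as `head -c 10240m` above; run it on the device under test.
    write_random_file("testfile", 10240)
```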

```
# and simply copy it from fileA to fileB within the device
du -hs testfile
11G testfile
du -s testfile
10485768 testfile

# copy big file A to big file B (on same device)
time cp -rv testfile testfile2
'testfile' -> 'testfile2'

real 0m7.801s <- so it copied the file within the same device at 1.28188 GBytes/s
user 0m0.033s
sys 0m7.664s

# lets check that the copy process was done correctly
time md5sum testfile*
9596d43e1385d895924ecd66c015b46e testfile
9596d43e1385d895924ecd66c015b46e testfile2
# yep same files with same content

real 0m43.033s <- cpu again the limiting factor here
user 0m37.943s
sys 0m5.088s

# sysbench
sysbench fileio prepare
sysbench 1.0.20 (using bundled LuaJIT 2.1.0-beta2)

128 files, 16384Kb each, 2048Mb total
Creating files for the test...
Extra file open flags: (none)
Creating file test_file.0
Creating file test_file.1
...6
Creating file test_file.7
Creating file test_file.8
...
Creating file test_file.19
Creating file test_file.20
Creating file test_file.21
Creating file test_file.22
Creating file test_file.23
Creating file test_file.24
...
Creating file test_file.37
Creating file test_file.38
Creating file test_file.39
Creating file test_file.40
Creating file test_file.41
...
Creating file test_file.56
Creating file test_file.57
Creating file test_file.58
Creating file test_file.59
...
Creating file test_file.123
Creating file test_file.124
Creating file test_file.125
Creating file test_file.126
Creating file test_file.127
2147483648 bytes written in 2.41 seconds (848.94 MiB/sec).

time sysbench fileio --file-test-mode=rndrw run
sysbench 1.0.20 (using bundled LuaJIT 2.1.0-beta2)

Running the test with following options:
Number of threads: 1
Initializing random number generator from current time

Extra file open flags: (none)
128 files, 16MiB each
2GiB total file size
Block size 16KiB
Number of IO requests: 0
Read/Write ratio for combined random IO test: 1.50
Periodic FSYNC enabled, calling fsync() each 100 requests.
Calling fsync() at the end of test, Enabled.
Using synchronous I/O mode
Doing random r/w test
Initializing worker threads...

Threads started!

File operations:
    reads/s: 4296.19
    writes/s: 2864.13
    fsyncs/s: 9169.20

Throughput:
    read, MiB/s: 67.13 <- that would be very bad X-D
    written, MiB/s: 44.75

General statistics:
    total time: 10.0089s
    total number of events: 163388

Latency (ms):
    min: 0.00
    avg: 0.06
    max: 10.93
    95th percentile: 0.27
    sum: 9926.76

Threads fairness:
    events (avg/stddev): 163388.0000/0.00
    execution time (avg/stddev): 9.9268/0.00

real 0m10.024s
user 0m0.222s
sys 0m1.174s

# tidy up afterwards
rm -rf test_file*
```
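The copy check above relies on md5sum. A minimal Python sketch of the same verification reads the file in chunks, so a 10 GiB file never has to fit into RAM. It also reproduces the ~1.28 GiB/s copy rate from the 10240 MiB written by `head -c 10240m` (openssl adds a small header and padding, ignored here). `md5_file` and `copy_rate_gib_s` are hypothetical helpers, and hashing remains CPU-bound just like md5sum.

```python
import hashlib

def md5_file(path: str, chunk_size: int = 8 * 1024 * 1024) -> str:
    """Hex MD5 of a file, read in chunks of chunk_size bytes."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            h.update(block)
    return h.hexdigest()

def copy_rate_gib_s(size_bytes: int, seconds: float) -> float:
    return size_bytes / seconds / 1024 ** 3

if __name__ == "__main__":
    same = md5_file("testfile") == md5_file("testfile2")
    print("copy verified" if same else "MISMATCH")
    print(f"{copy_rate_gib_s(10240 * 1024 ** 2, 7.801):.3f} GiB/s")
```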

testing another benchmark https://unix.stackexchange.com/questions/93791/benchmark-ssd-on-linux-how-to-measure-the-same-things-as-crystaldiskmark-does-i

```
apt install fio
/scripts/bench/bench_harddisk_fio.sh
What drive do you want to test? (Default: /root on /dev/mapper )
Only directory paths (e.g. /home/user/) are valid targets.
Use bash if you want autocomplete.
/media/user/md0/
How many times to run the test? (Default: 5)
How large should each test be in MiB? (Default: 1024)
Only multiples of 32 are permitted!
Do you want to write only zeroes to your test files to imitate dd benchmarks? (Default: 0)
Enabling this setting may drastically alter your results, not recommended unless you know what you're doing.
Would you like to include legacy tests (512kb & Q1T1 Sequential Read/Write)? [Y/N]
cat: /sys/block/md/device/model: No such file or directory
cat: /sys/block/md/size: No such file or directory
/scripts/bench/bench_harddisk_fio.sh: line 132: *512/1024/1024/1024: syntax error: operand expected (error token is "*512/1024/1024/1024")
Running Benchmark on: /dev/md, (), please wait...

Results:
Sequential Q32T1 Read: 5736MB/s [ 175 IOPS]
Sequential Q32T1 Write: 2857MB/s [ 87 IOPS]
4KB Q8T8 Read: 1475MB/s [ 368807 IOPS]
4KB Q8T8 Write: 816MB/s [ 204230 IOPS]
4KB Q32T1 Read: 615MB/s [ 153984 IOPS]
4KB Q32T1 Write: 435MB/s [ 108936 IOPS]
4KB Read: 61MB/s [ 15485 IOPS]
4KB Write: 197MB/s [ 49408 IOPS]

Would you like to save these results? [Y/N]
Saving at /root/md2021-05-20110739.txt

# it is always important to watch the cpu not being the limiting factor
# or it would make for an unfair bench
hdparm -tT /dev/md0

/dev/md0:
 Timing cached reads: 9810 MB in 2.00 seconds = 4911.95 MB/sec
 HDIO_DRIVE_CMD(identify) failed: Inappropriate ioctl for device
 Timing buffered disk reads: 7790 MB in 3.00 seconds = 2596.34 MB/sec

hdparm -tT /dev/md0

/dev/md0:
 Timing cached reads: 10560 MB in 2.00 seconds = 5288.32 MB/sec
 HDIO_DRIVE_CMD(identify) failed: Inappropriate ioctl for device
 Timing buffered disk reads: 7834 MB in 3.00 seconds = 2611.05 MB/sec
```
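In the fio results, throughput and IOPS are two views of the same measurement: throughput = IOPS × block size. A quick check on the 4 KB rows shows how the script's "MB/s" column relates to IOPS. Every row matches IOPS × 4 KiB, expressed in thousands of KiB/s and truncated. This is inferred from the numbers, not from the script's source. The sketch also shows how much queue depth matters: 61 vs 1475 for 4 KB reads.

```python
def throughput_from_iops(iops: int, block_kib: int) -> int:
    """IOPS x block size, in thousands of KiB/s, truncated (inferred unit)."""
    return iops * block_kib // 1000

# 4 KB rows from the fio results above: (label, reported "MB/s", IOPS)
ROWS = [
    ("4KB Q8T8 Read", 1475, 368807),
    ("4KB Q8T8 Write", 816, 204230),
    ("4KB Q32T1 Read", 615, 153984),
    ("4KB Q32T1 Write", 435, 108936),
    ("4KB Read", 61, 15485),
    ("4KB Write", 197, 49408),
]

if __name__ == "__main__":
    for label, reported, iops in ROWS:
        calc = throughput_from_iops(iops, 4)
        status = "ok" if calc == reported else "differs"
        print(f"{label:16} reported {reported:5} computed {calc:5} {status}")
```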

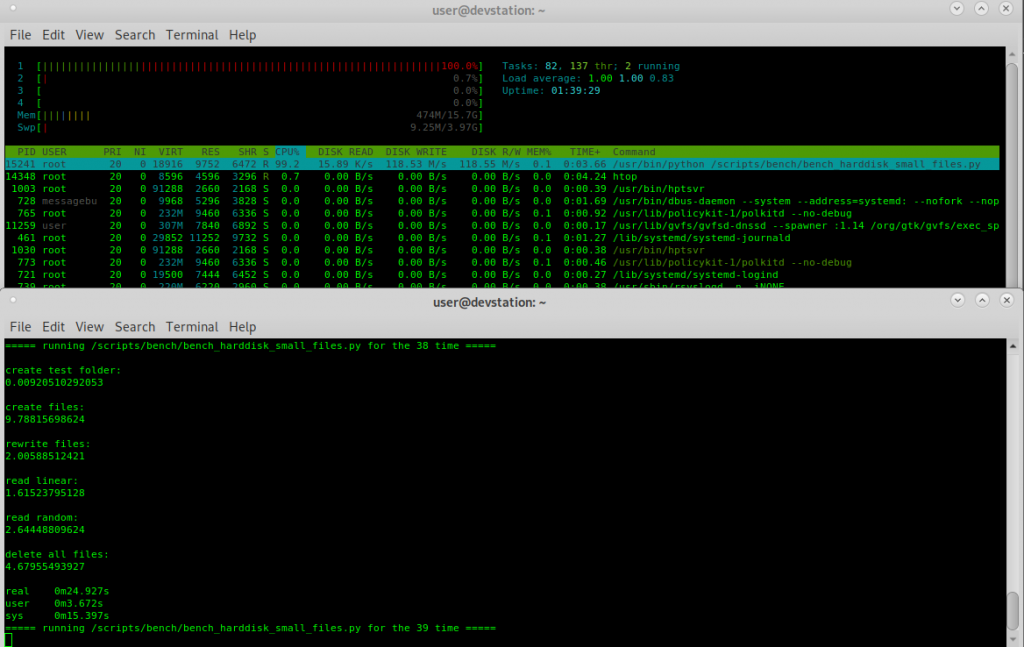

now: let’s do some long term stress testing

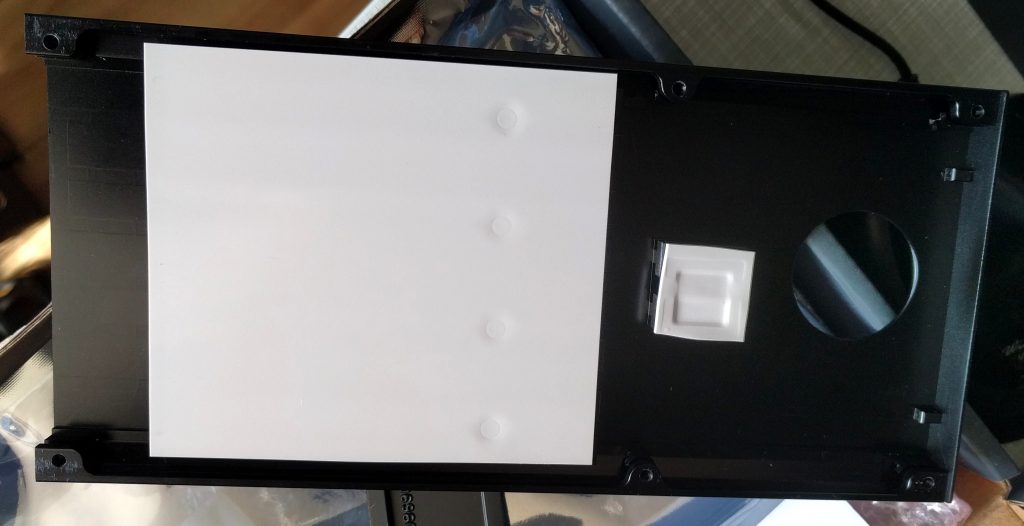

as these cards are said to become very hot

and RAID cards that “burn through” and lose all data are no good for any production use

after 100 iterations the card became warm, but not hot (as seen in the pic above, an additional 12cm cooler was put right in front of it for additional cooling, so that might have helped too)

```
cat /scripts/bench/often.sh
#!/bin/bash
echo "=== doing long term testing of harddisk ===" > /scripts/bench/bench_harddisk_small_files.py.log
for ((n=1; n<=100; n++))
do
    echo "===== running /scripts/bench/bench_harddisk_small_files.py for the $n time =====" >> /scripts/bench/bench_harddisk_small_files.py.log
    time /scripts/bench/bench_harddisk_small_files.py >> /scripts/bench/bench_harddisk_small_files.py.log
done

# where to write the files
cd /media/user/md0

# start the marathon benchmark
/scripts/bench/often.sh &
[1] 13841

# watch what it is doing
tail -f /scripts/bench/bench_harddisk_small_files.py.log
```

the card and the NVMes handled this bench with ease… the card got a little warm, but not hot. As many users reported, this is largely because GNU Linux mdadm (software RAID, i.e. the CPU) does all the RAID work, while the PLX chip only acts as a PCIe switch and does no RAID work itself.

This would explain why the speed is only HALF of what would have been expected (RAID10 should be roughly double the speed of a single harddisk, or NVMe in this case).

This is because there are no known working drivers for this card under GNU Linux Debian 10: compiling the vendor driver resulted in an error.

so let’s take it to the next level.

removed the additional 12cm cooler in front of it (not the one on the board… just the white one placed on the right side of the card)

and stressed card + NVMes like this…

```
cat /scripts/bench/often_sequential.sh
#!/bin/bash
echo "=== doing long term testing of harddisk ===" > /scripts/bench/bench_harddisk.sh.log
for ((n=1; n<=300; n++))
do
    echo "===== running /scripts/bench/bench_harddisk.sh for the $n time =====" >> /scripts/bench/bench_harddisk.sh.log
    time /scripts/bench/bench_harddisk.sh >> /scripts/bench/bench_harddisk.sh.log
done

# run it like this
/scripts/bench/often_sequential.sh &
tail -f /scripts/bench/bench_harddisk.sh.log

# to get output like this
===== running /scripts/bench/bench_harddisk.sh for the 17 time =====
=== harddisk sequential write and read bench v1 ===
starting test on the device that holds the current directory the user is in
no need to run it as root
========== writing 3GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 2.29723 s, 935 MB/s

real 0m2.303s
user 0m0.000s
sys 0m1.930s
========== reading 6GB of zeroes ==========
0+1 records in
0+1 records out
2147479552 bytes (2.1 GB, 2.0 GiB) copied, 0.861939 s, 2.5 GB/s

real 0m0.868s
user 0m0.000s
sys 0m0.861s
========== tidy up remove testfile ==========

real 0m3.292s
user 0m0.007s
sys 0m2.906s
```
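Over 300 runs, a gradual drop in the dd rates would be one hint of thermal throttling. A hedged sketch that pulls the write and read rates out of bench_harddisk.sh.log and reports their spread. It assumes each run prints exactly one write line followed by one read line, as in the output above. `summarize` is a hypothetical helper. dd rounds its rates coarsely (e.g. "2.5 GB/s"), so only larger drops would show up.

```python
import re

RATE = re.compile(r"copied, [\d.]+ s, ([\d.]+) ([kMG])B/s")
SCALE = {"k": 1e-3, "M": 1.0, "G": 1e3}  # dd's decimal prefixes -> MB/s

def summarize(log_text: str) -> dict:
    """Min/max write and read rates in MB/s (1st dd line per run = write, 2nd = read)."""
    rates = [float(value) * SCALE[prefix] for value, prefix in RATE.findall(log_text)]
    writes, reads = rates[0::2], rates[1::2]
    return {
        "runs": len(writes),
        "write_min": min(writes), "write_max": max(writes),
        "read_min": min(reads), "read_max": max(reads),
    }

if __name__ == "__main__":
    with open("/scripts/bench/bench_harddisk.sh.log") as f:
        print(summarize(f.read()))
```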

indeed the card was now running warmer… but nothing of concern (concern would start beyond 70 °C, i.e. too hot to touch)

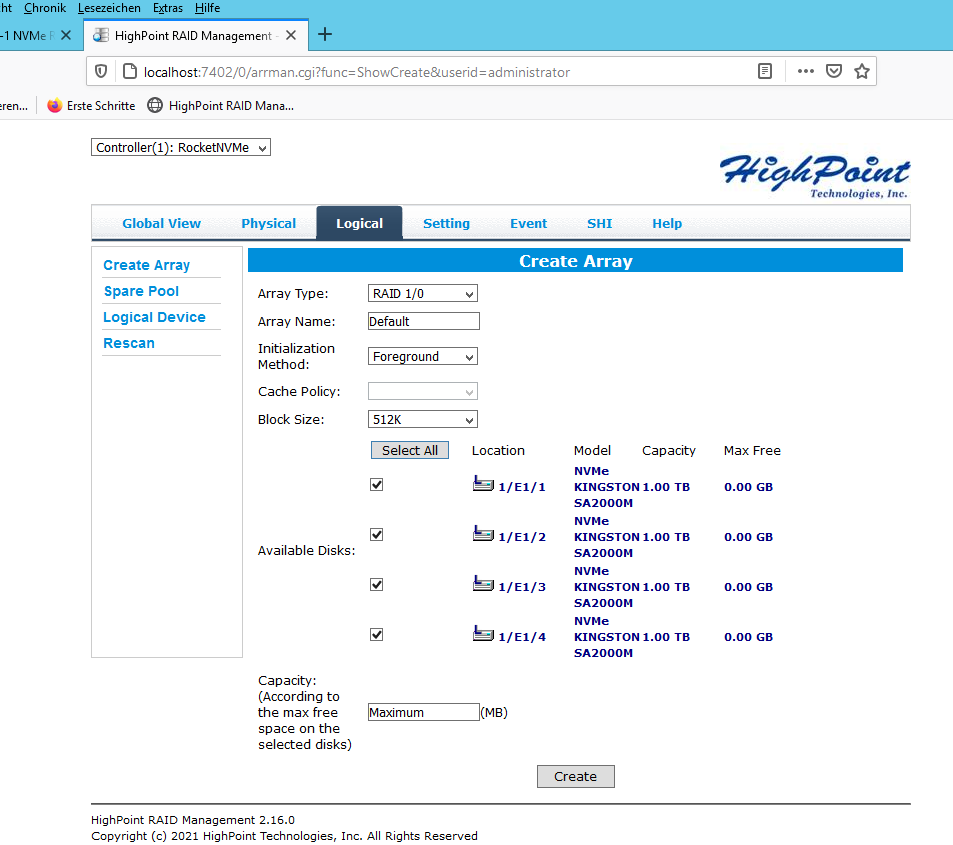

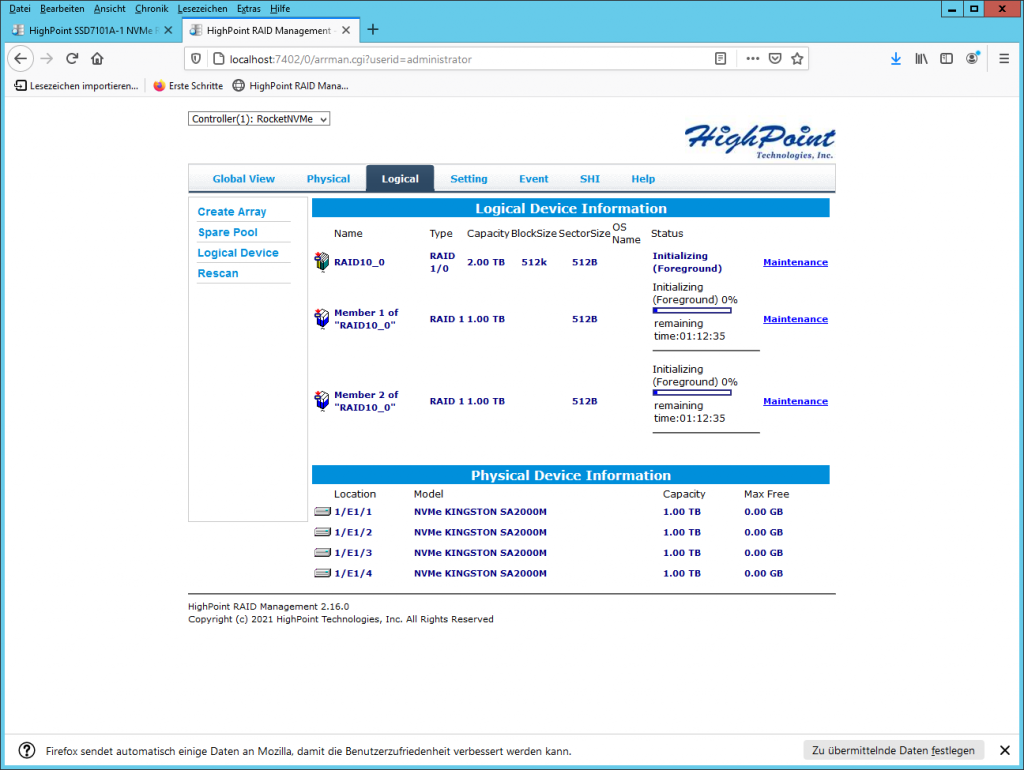

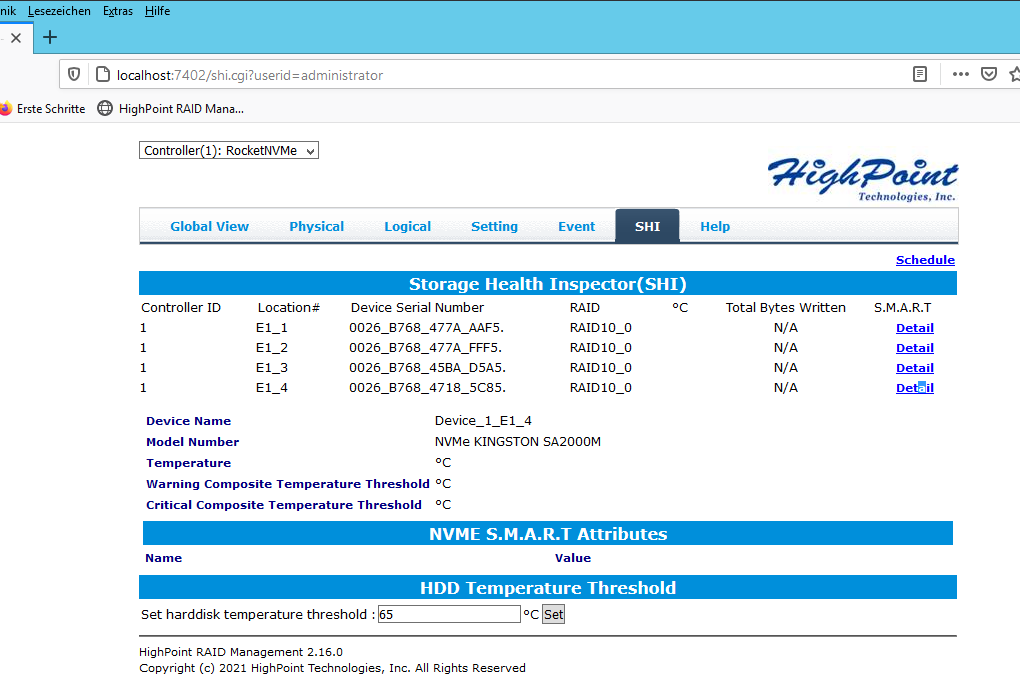

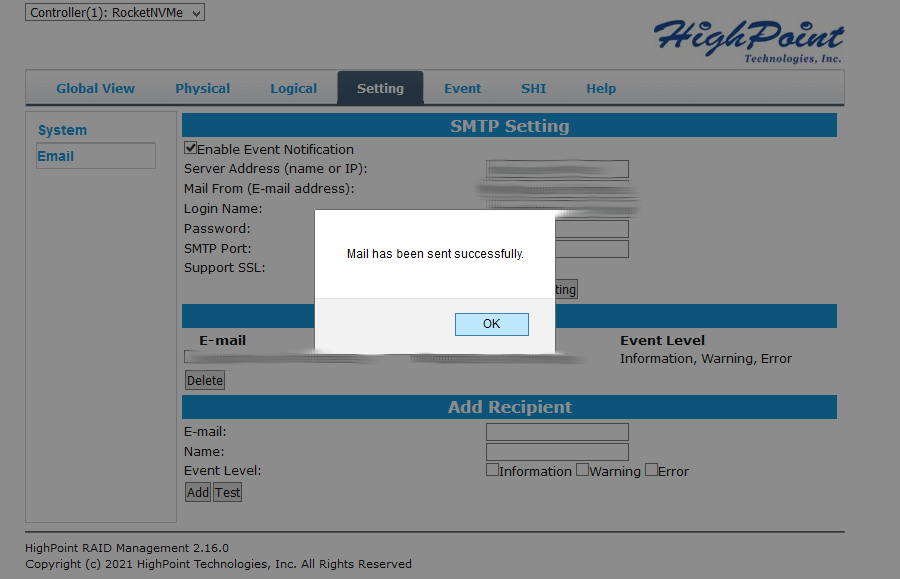

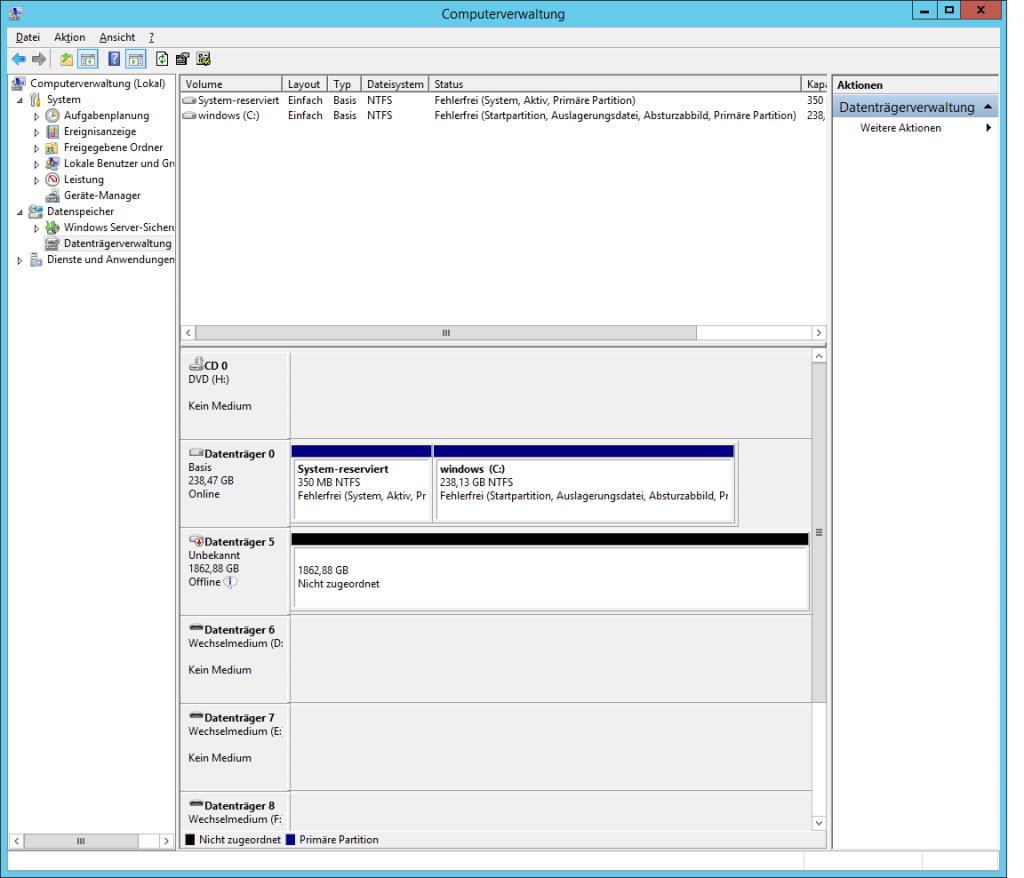

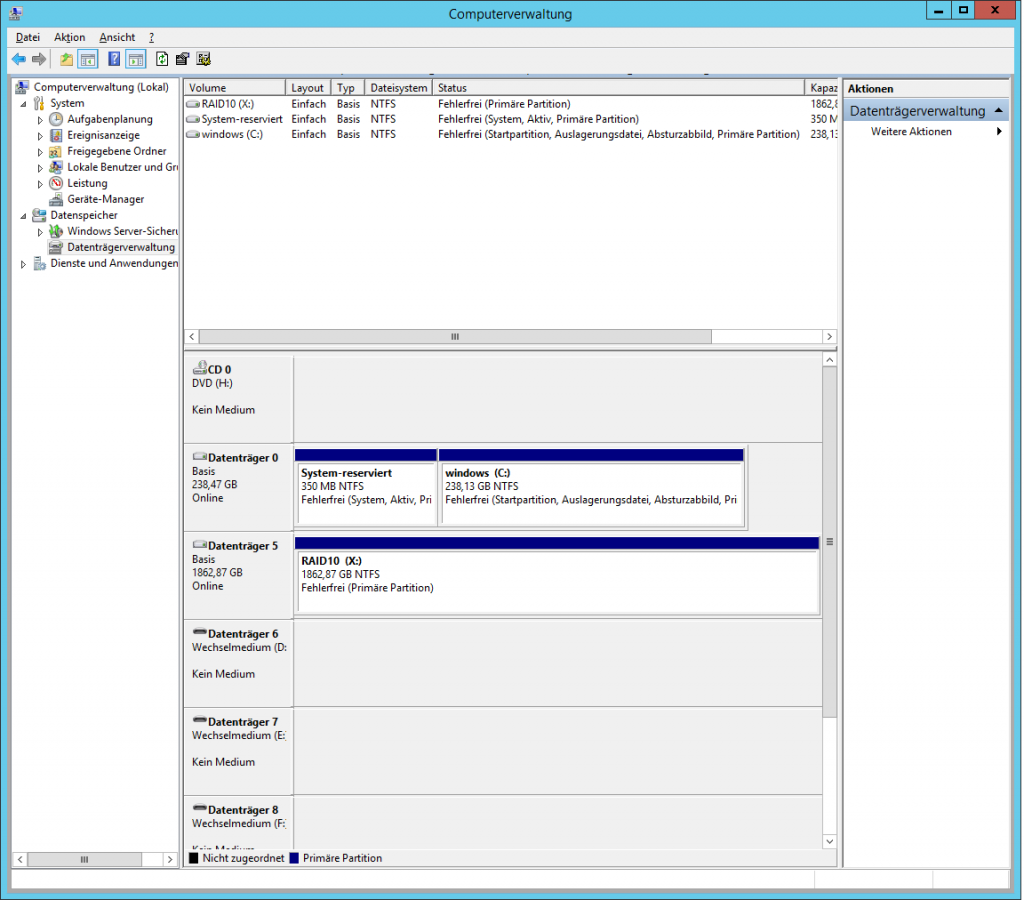

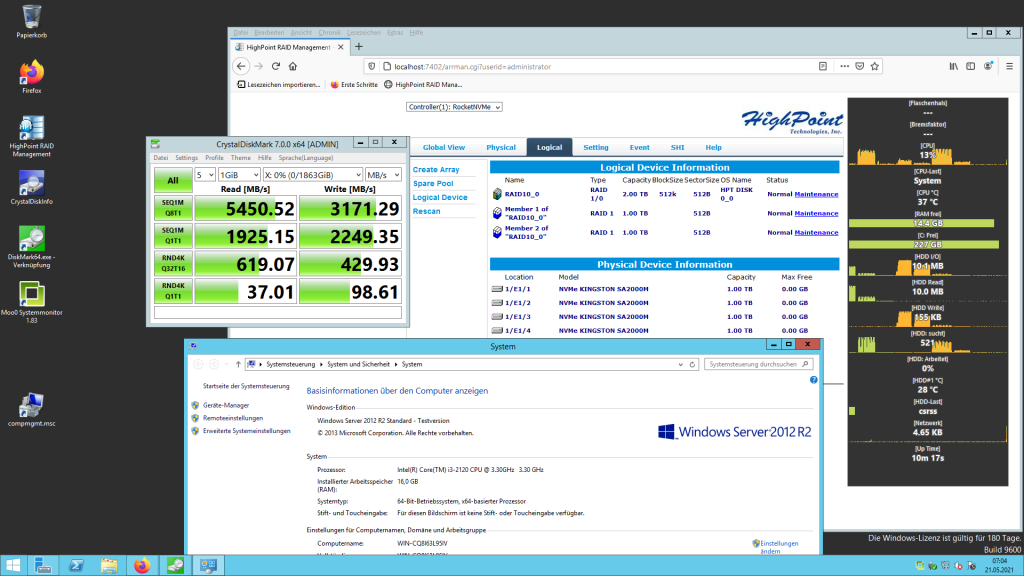

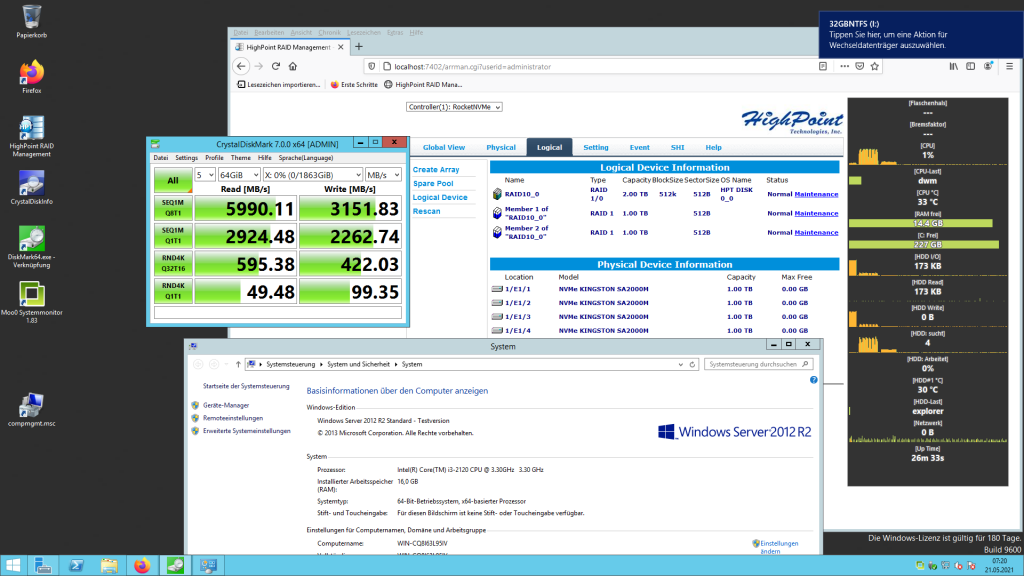

now to the Windows Server 2012 R2 benchmarks:

as this card is intended to upgrade such a server and speed up its file-based database X-D

amazing RAID10 speeds (as expected, 2x a single Kingston NVMe, which has a read speed of ~2 GByte/s)

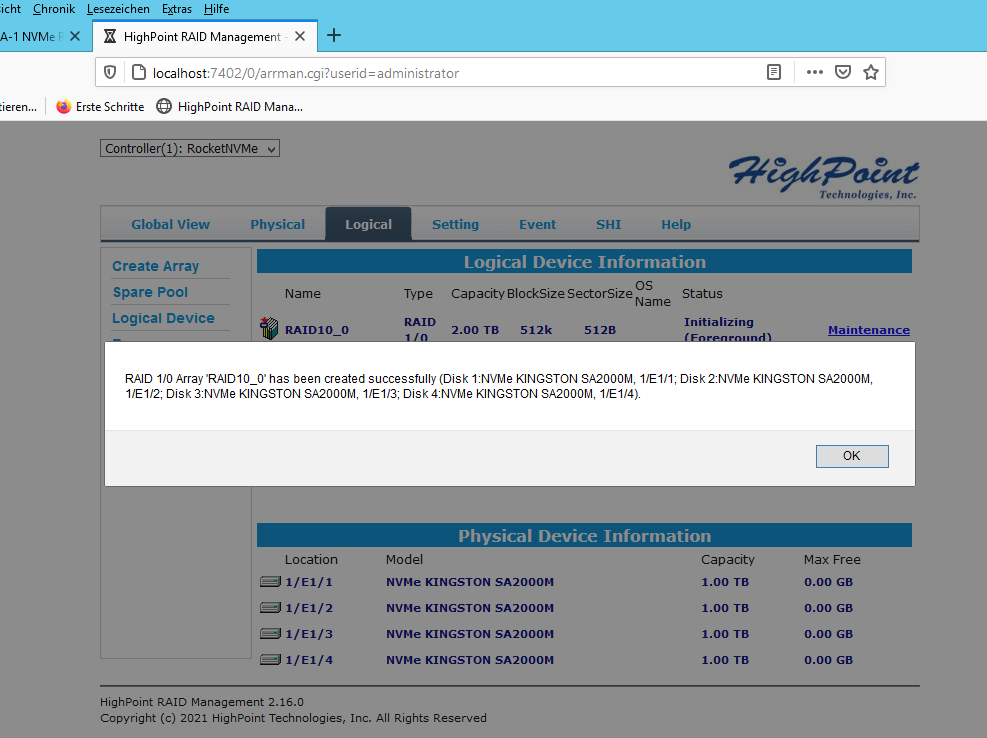

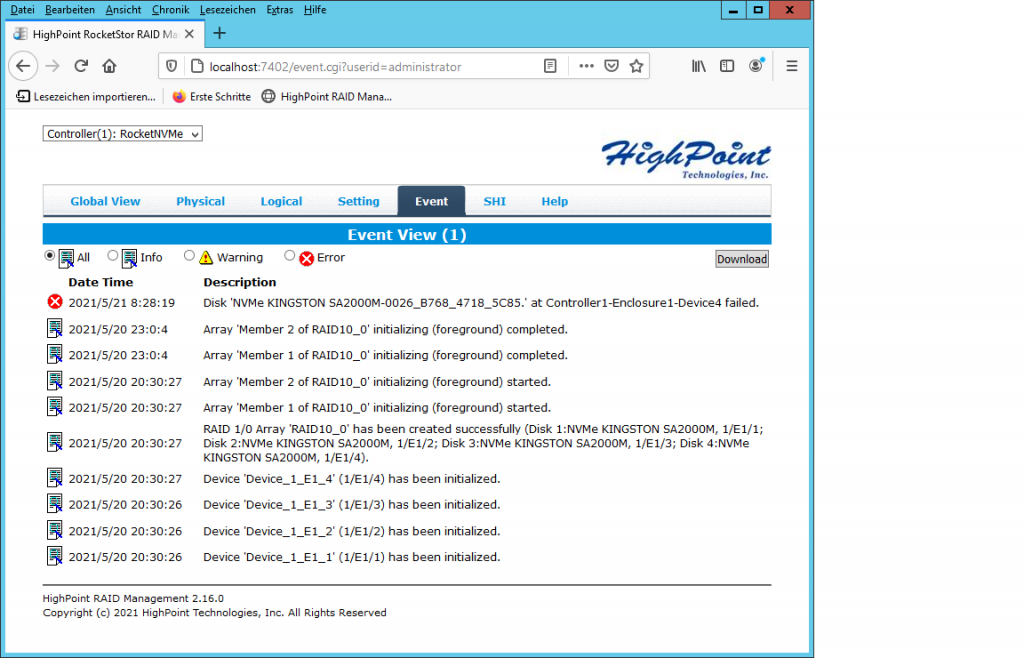

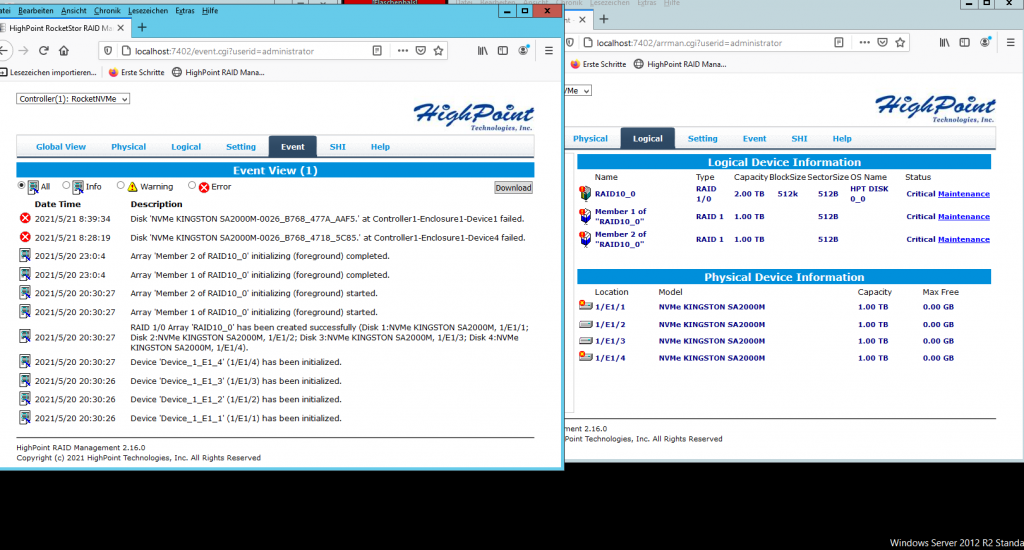

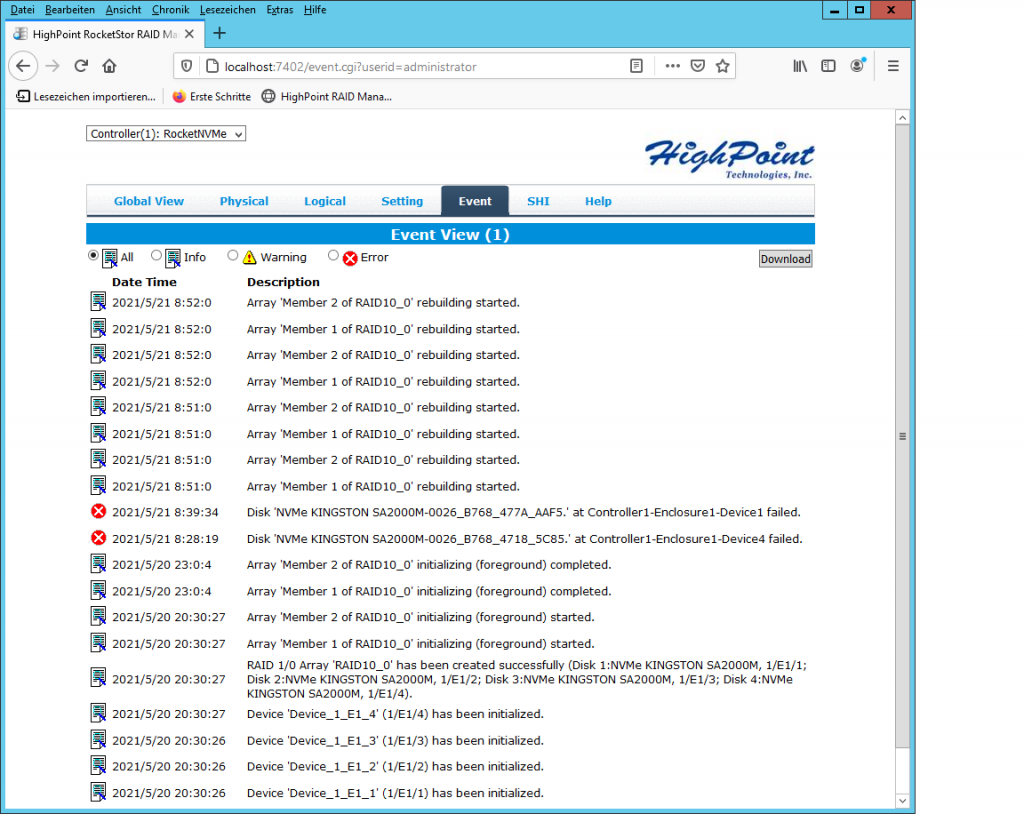

but when running the ATTO Benchmark things went wrong… one NVMe was reported to have failed… shortly after…

a second NVMe was reported to have failed…

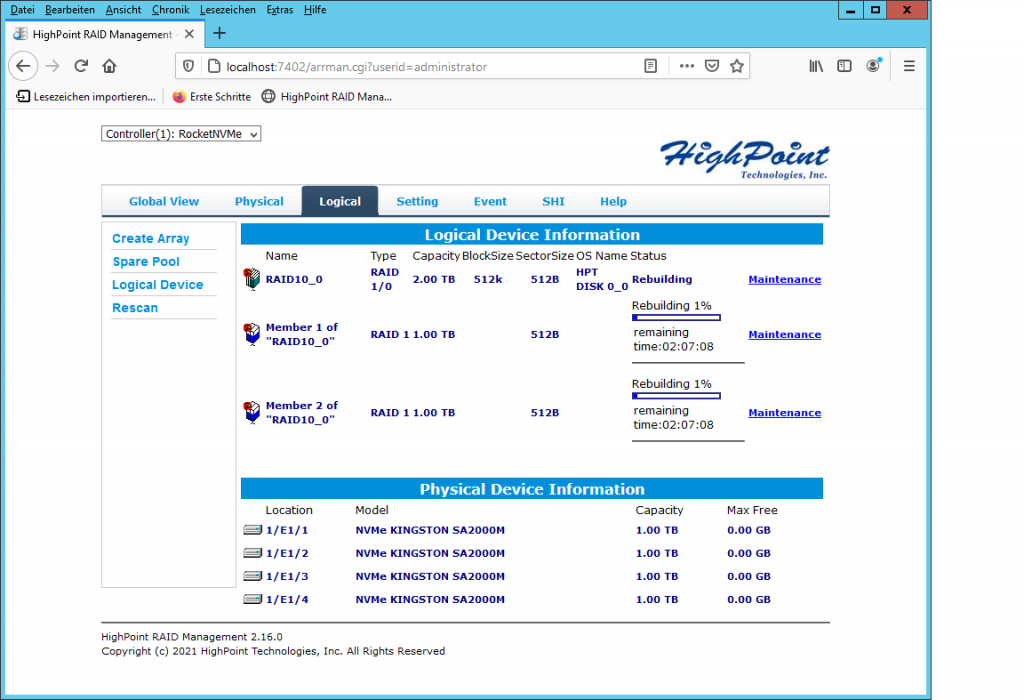

after a reboot… it started rebuilding the RAID10… so it was not the NVMes that had failed… but the RAID card that got out of sync.

argh HighPoint… this does not look like a RAID card that an admin should trust critical data to 🙁 (at least not under Windows Server 2012 R2)

how to realize NVMe RAID10?
