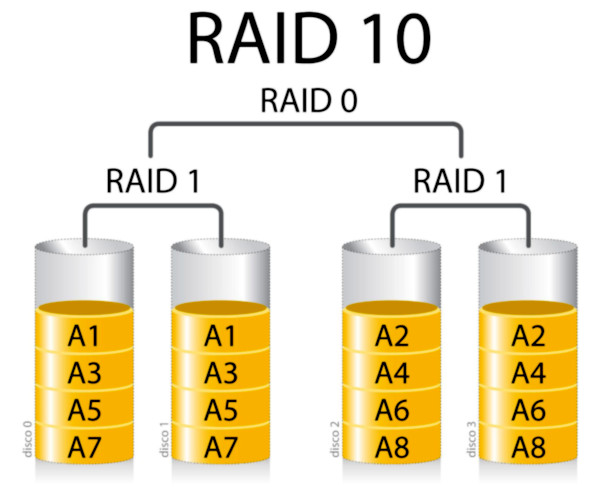

RAID10 combines the speed of RAID0 (striping) with the resilience of RAID1 (mirroring).
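As a rough sketch of what that trade-off means for capacity: assuming the usual two copies of every block, half of the raw space is usable. The helper name `raid10_usable_bytes` and the 4 TB disk size are only illustrative assumptions, not part of mdadm.

```shell
#!/bin/bash
# Hypothetical helper (not part of mdadm): usable bytes of a RAID10
# built from N equal disks, assuming 2 copies of every block.
raid10_usable_bytes() {
    local disks="$1" bytes_per_disk="$2"
    echo $(( disks * bytes_per_disk / 2 ))
}

# 4 disks of 4 TB each (decimal: 4 * 10^12 bytes)
usable=$(raid10_usable_bytes 4 4000000000000)
echo "usable bytes: $usable"

# the same number in binary TiB, the unit lsblk uses for its "T" suffix
awk -v b="$usable" 'BEGIN { printf "usable TiB: %.1f\n", b / (1024 ^ 4) }'
```

So 4x 4 TB gives 8 TB in decimal units, which tools that count in binary units display as roughly 7.3T.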

Watch out: shingled (SMR) hard disks are not well suited for RAID!

Setting up a GNU/Linux mdadm software RAID10 is actually pretty straightforward: no need to do any partitioning.

    # tested on
    hostnamectl
      Operating System: Debian GNU/Linux 11 (bullseye)
                Kernel: Linux 5.10.0-8-amd64
          Architecture: x86-64

    su - root

    # hardware requirement: 4x identical 4 TB harddisks
    # (e.g. Hitachi HGST Ultrastar 7K4000)
    lsblk
    NAME MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda    8:0    0  3.6T  0 disk
    sdb    8:16   0  3.6T  0 disk
    sdc    8:32   0  3.6T  0 disk
    sdd    8:48   0  3.6T  0 disk

    apt update
    # needed
    apt install mdadm
    # optional
    apt install lm-sensors hddtemp smartmontools
    systemctl start smartd
    /etc/init.d/kmod start

    # optional: keep an eye on cpu & hd temps
    sensors-detect
    # YES, YES, YES....

    # script that runs a bunch of health tests and shows temp of all harddisks
    cat /scripts/harddisk_iteration.sh
    #!/bin/bash
    echo "=== iterate over all harddisks in the system: ==="
    for x in {a..z}
    do
        # only look at /dev/sdX devices that actually exist
        if [ -b "/dev/sd$x" ]; then
            echo "===== /dev/sd$x =====";
            hddtemp "/dev/sd$x"
            # heuristic: a drive that accepts SCT ERC (error recovery control)
            # timeouts is treated as suitable for RAID
            if smartctl -l scterc,70,70 "/dev/sd$x" > /dev/null ; then
                echo "is good for raid (not shingled)";
            else
                echo "no good for raid, shingled harddisk, sue vendor";
            fi;
            smartctl -l error "/dev/sd$x";
            echo "";
            smartctl -H "/dev/sd$x";
        fi
    done

    # make mount point
    mkdir /media/user/md0

    # WARNING: this destroys all data on sda, sdb, sdc and sdd
    mdadm --create /dev/md0 --level=10 --raid-devices=4 /dev/sd[a-d]

    # watch temps of sensors while raid builds
    watch -n 2 'cat /proc/mdstat; sensors'

    # when raid build is complete
    # format and label it
    mkfs.ext4 -L "raid10" /dev/md0

    # mount it
    mount /dev/md0 /media/user/md0

    # so non-root-user can actually access and use it
    chown -R user: /media/user/md0

    # :) run a basic harddisk benchmark
    cat /scripts/bench/bench_harddisk.sh
    #!/bin/bash
    echo "=== harddisk sequential write and read bench v1 ==="
    echo "starting test on the device that holds the current directory the user is in"
    echo "no need to run it as root"
    echo ""
    # note: on Linux a single dd block transfers at most 2147479552 bytes,
    # so bs=3G count=1 effectively writes 2.1 GB (see "0+1 records" below)
    echo "========== writing 3GB of zeroes =========="
    time dd if=/dev/zero of=./testfile bs=3G count=1 oflag=direct
    # note: despite the "6GB" label, this reads back the same 2.1 GB testfile
    echo "========== reading 6GB of zeroes =========="
    time dd if=./testfile bs=3GB count=1 of=/dev/null
    echo "========== tidy up remove testfile =========="
    rm -rf ./testfile;

    # run sequential write/read benchmark
    cd /media/user/md0
    time /scripts/bench/bench_harddisk.sh
    === harddisk sequential write and read bench v1 ===
    starting test on the device that holds the current directory the user is in
    no need to run it as root

    ========== writing 3GB of zeroes ==========
    0+1 records in
    0+1 records out
    2147479552 bytes (2.1 GB, 2.0 GiB) copied, 13.3767 s, 161 MB/s

    real 0m13.393s
    user 0m0.000s
    sys  0m2.464s
    ========== reading 6GB of zeroes ==========
    0+1 records in
    0+1 records out
    2147479552 bytes (2.1 GB, 2.0 GiB) copied, 8.1213 s, 264 MB/s

    real 0m8.129s
    user 0m0.000s
    sys  0m1.487s
    ========== tidy up remove testfile ==========

    real 0m21.641s
    user 0m0.002s
    sys  0m4.068s

    # another python based harddisk benchmark
    # which tests handling of small files
    # for whatever reason wants to run as root (actually no good)
    time /scripts/bench/bench_harddisk_small_files.py
    create test folder: 0.00346207618713
    sh: 1: sudo: not found
    create files: 9.60848593712
    sh: 1: sudo: not found
    rewrite files: 1.95726704597
    sh: 1: sudo: not found
    read linear: 0.54031085968
    sh: 1: sudo: not found
    read random: 0.626809120178
    sh: 1: sudo: not found
    delete all files: 3.57898211479
    sh: 1: sudo: not found

    real 0m16.336s <- that is pretty quick actually (compared to kingston ssd + i5)
    user 0m3.537s
    sys  0m11.636s

    echo "=== print nice overview over all harddisks and filesystems: ==="
    lsblk -o "NAME,MAJ:MIN,RM,SIZE,RO,FSTYPE,MOUNTPOINT,UUID"
    === print nice overview over all harddisks and filesystems: ===
    NAME    MAJ:MIN RM  SIZE RO FSTYPE            MOUNTPOINT      UUID
    sda       8:0    0  3.6T  0 linux_raid_member                 0816d6e1-be69-1f26-fac6-xxxxxxxxxxxx
    └─md0     9:0    0  7.3T  0 ext4              /media/user/md0 25eec7f4-304c-4dfa-862b-xxxxxxxxxxxx
    sdb       8:16   0  3.6T  0 linux_raid_member                 0816d6e1-be69-1f26-fac6-xxxxxxxxxxxx
    └─md0     9:0    0  7.3T  0 ext4              /media/user/md0 25eec7f4-304c-4dfa-862b-xxxxxxxxxxxx
    sdc       8:32   0  3.6T  0 linux_raid_member                 0816d6e1-be69-1f26-fac6-xxxxxxxxxxxx
    └─md0     9:0    0  7.3T  0 ext4              /media/user/md0 25eec7f4-304c-4dfa-862b-xxxxxxxxxxxx
    sdd       8:48   0  3.6T  0 linux_raid_member                 0816d6e1-be69-1f26-fac6-xxxxxxxxxxxx
    └─md0     9:0    0  7.3T  0 ext4              /media/user/md0 25eec7f4-304c-4dfa-862b-xxxxxxxxxxxx

    # note: the 4x 4TB RAID10 offers 8 TB (decimal), which lsblk displays
    # as 7.3T (binary TiB), so the gap is mostly a difference in units
    # :)
    # congrats! :)
    # do 10sec of the happy dance! :)
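While the array builds, /proc/mdstat carries a progress line, which is what the `watch` command above keeps on screen. A minimal sketch of waiting for that line to disappear instead of watching by hand: the function name `md_sync_running` and the 60 second polling interval are assumptions, and the check only looks for the words resync, recovery, reshape or check followed by `=`.

```shell
#!/bin/bash
# Hypothetical helper: exits 0 while the given mdstat text still reports
# a running (or pending) resync/recovery/reshape/check, exits 1 otherwise.
md_sync_running() {
    local mdstat_file="${1:-/proc/mdstat}"
    grep -Eq '(resync|recovery|reshape|check) *=' "$mdstat_file"
}

# Usage sketch: block until the initial build has finished.
# while md_sync_running; do sleep 60; done
# echo "array is in sync"
```

The file path is a parameter so the check can be tried against a saved copy of /proc/mdstat before relying on it.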

Links:

Warning: the dd-based benchmark described at https://www.sebastien-han.fr/blog/2014/10/10/ceph-how-to-test-if-your-ssd-is-suitable-as-a-journal-device/ is DATA DESTRUCTIVE, so make a backup first or only use a harddisk that holds nothing important!
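One way to avoid pointing dd at a raw disk by accident is to check the target first. A minimal sketch, assuming a hypothetical helper named `refuse_block_device`; it only tests whether the path is a block device and makes no other safety checks.

```shell
#!/bin/bash
# Hypothetical guard: fail if the dd target is a block device
# (e.g. /dev/sda), because writing to it overwrites the disk itself.
refuse_block_device() {
    local target="$1"
    if [ -b "$target" ]; then
        echo "refusing to write to block device $target" >&2
        return 1
    fi
    return 0
}

# Usage sketch: only benchmark into a regular file.
# refuse_block_device ./testfile && dd if=/dev/zero of=./testfile bs=1M count=1024 oflag=direct
```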
