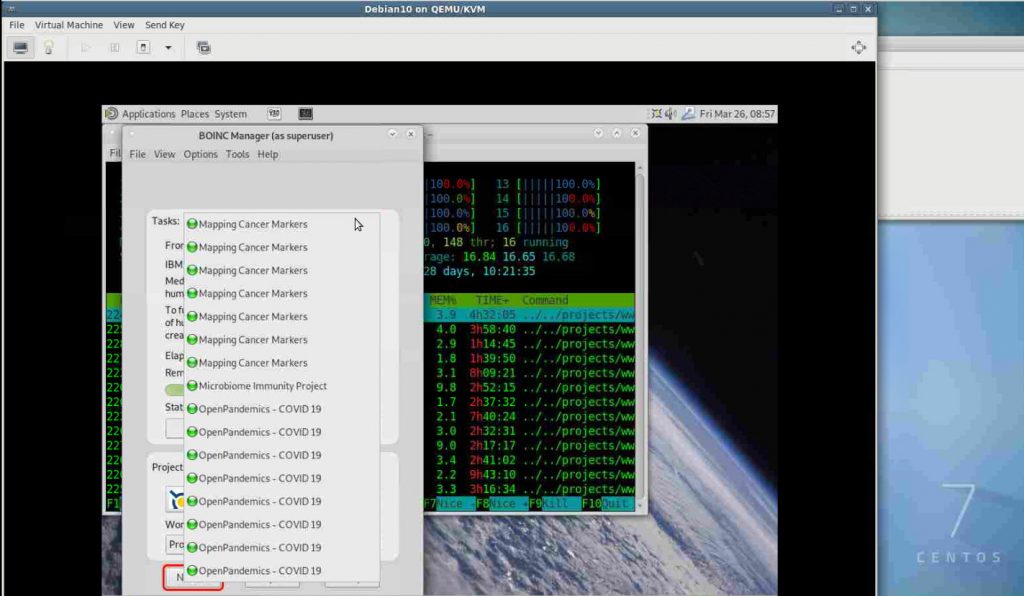

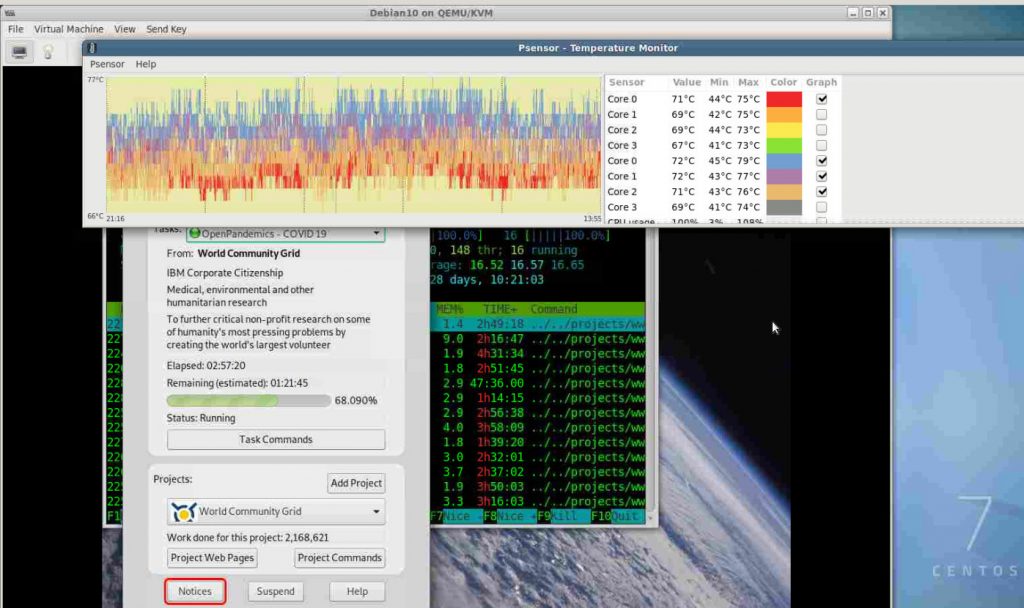

update: with varying CPU load this device uses roughly 6 kWh per day (rough estimate from a 30-day measurement)
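as a quick sanity check, 6 kWh per day works out to an average draw of 250 W, which sits between the idle and full-load readings further down. a minimal sketch of that conversion; the function names and the 0.30 €/kWh price are assumptions for illustration, not part of the measurement:

```shell
# Convert a daily energy figure into average power and a rough cost.
# Function names and the electricity price are illustrative assumptions.

# avg_watts KWH_PER_DAY -> average power in watts
avg_watts() {
    awk -v kwh="$1" 'BEGIN { printf "%.0f\n", kwh * 1000 / 24 }'
}

# monthly_cost KWH_PER_DAY PRICE_PER_KWH -> cost of 30 days
monthly_cost() {
    awk -v kwh="$1" -v price="$2" 'BEGIN { printf "%.2f\n", kwh * 30 * price }'
}

avg_watts 6            # 6 kWh/day -> prints 250
monthly_cost 6 0.30    # assumed price per kWh, adjust to your tariff
```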

it runs pretty quiet even on 100% CPU load 😊👍🏻🖖🏻🍻

got it cheap on eBay and wanted to take it for a test-drive.

pretty impressed how quiet that beast runs.

ran it from an ideal-linux.de usb stick.

cpu benchmark and noise levels:

It has been running a 100% CPU load test for 10 minutes now, and noise and heat are still pretty good.

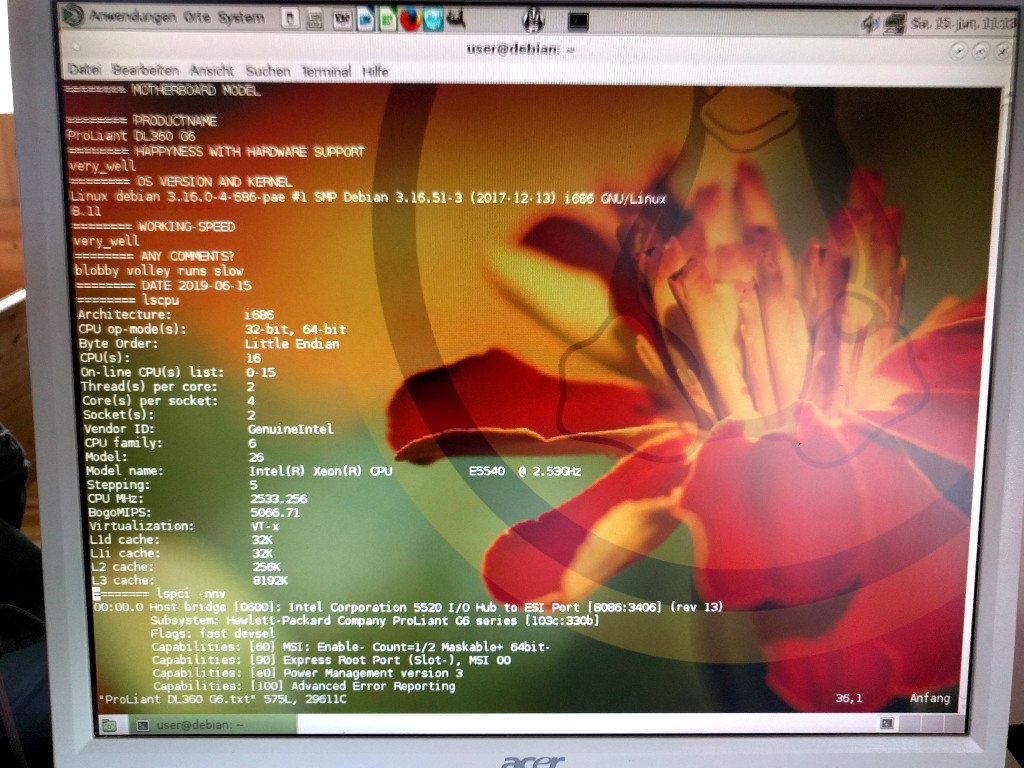

here are some pictures.

it currently has 2x CPUs (Xeon E5540 @ 2.53GHz), each with 4x real cores and 2x threads per core, which makes 2x4x2 = 16 threads.

for cpu bench i use time and sysbench.
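a sketch of how such a run might look. the post does not say which sysbench test or options were used, so the `cpu` test, the prime limit of 20000 and the sysbench 1.0 command syntax are assumptions; `expected_threads` and `cpu_bench` are made-up helper names.

```shell
# Expected logical CPUs: sockets x cores per socket x threads per core.
expected_threads() {
    echo $(( $1 * $2 * $3 ))
}

# Time the sysbench CPU test on all logical CPUs (bash, sysbench 1.0 syntax).
# Test type and prime limit are guesses; the post does not state them.
cpu_bench() {
    threads="$(nproc)"
    time sysbench cpu --threads="$threads" --cpu-max-prime=20000 run
}

expected_threads 2 4 2   # 2 sockets, 4 cores each, 2 threads per core
# cpu_bench              # uncomment on a machine with sysbench installed
```

comparing the output of `nproc` with `expected_threads` is a quick way to see whether Hyper-Threading is enabled in the BIOS.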

and this thing is fast…

HP ProLiant with 2x E5540 @ 2.53GHz, bogomips : 5066.66, completes test in real 0m3.304s

Intel(R) Core(TM) i5-4200U CPU @ 1.60GHz, bogomips : 4589.16, real 0m10.039s

(so in this test the i5 @ 1.6GHz is about 3x slower than the 2x E5540 @ 2.53GHz)
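the "3x" comes straight from the two wall-clock times. a tiny sketch of the division (`speedup` is a made-up helper name):

```shell
# speedup SLOW_SECONDS FAST_SECONDS -> how many times faster the second run was
speedup() {
    awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", slow / fast }'
}

speedup 10.039 3.304   # i5-4200U time vs. 2x E5540 time -> prints 3.04
```

keep in mind that this compares whole machines: if the test ran on all threads, that is 16 threads against the 4 of the i5-4200U, so per thread the old Xeon is not faster.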

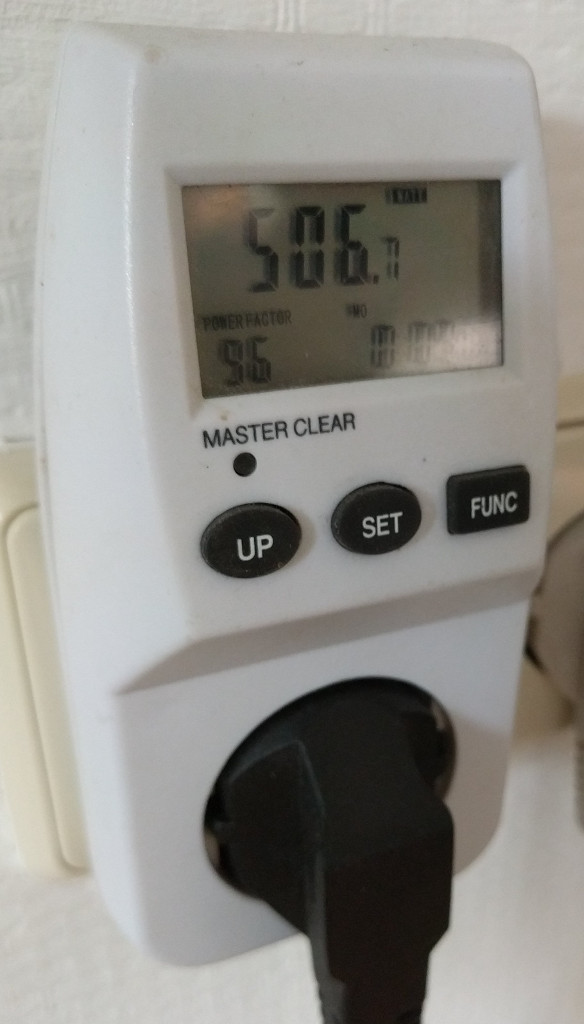

power consumption/watt usage:

this was measured using only one power supply and without harddisks, so you can expect maybe another +10 Watts per harddisk (max 4x SAS, so about +40 Watts with all bays populated)

off: 15 Watts

startup: 220 Watts

on and idle: 170 Watts

on and 100% CPU load on all cores: 500 Watts (one power supply is only rated at 460 Watts… so you should definitely not run 100% CPU loads on one power supply permanently)
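the numbers above can be put into a small power budget. this is only a sketch: the wattages are the readings from this post, the per-disk figure is the rough +10 Watts estimate, and `headroom` is a made-up helper name.

```shell
# Readings from this post; watts_per_disk is an estimate, not a measurement.
psu_rating=460
idle=170
full_load=500
disks=4
watts_per_disk=10

# headroom LOAD_WATTS RATING_WATTS -> remaining watts (negative = overloaded)
headroom() {
    echo $(( $2 - $1 ))
}

echo "idle with ${disks} disks:     $(( idle + disks * watts_per_disk )) W"
echo "headroom at idle:      $(headroom "$idle" "$psu_rating") W"
echo "headroom at full load: $(headroom "$full_load" "$psu_rating") W"
```

the last line comes out negative (-40 W), which is the reason not to run full load on a single 460 Watts power supply for long.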

even after 10 minutes straight under full load, the noise level is still good.

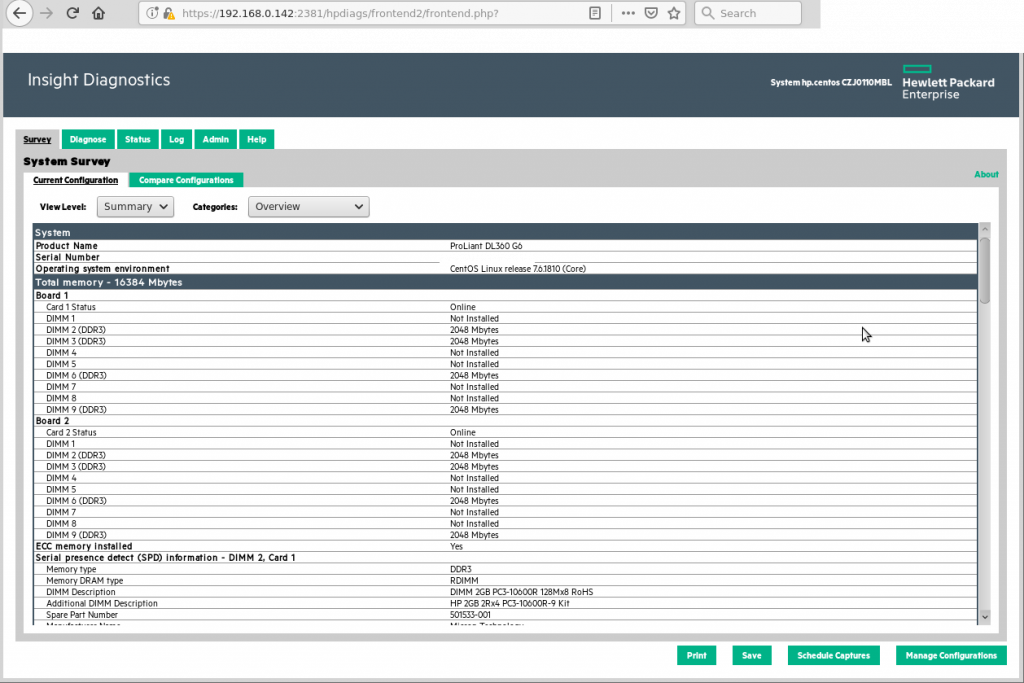

hardware report:

detailed info about the hardware, created with the hardware detection script:

ideal-linux.de hardware detection report ProLiant DL360 G6.txt

RAID?

    lsscsi
    [0:0:0:0]    storage HP       P410i            6.60  -
    [0:1:0:0]    disk    HP       LOGICAL VOLUME   6.60  /dev/sda

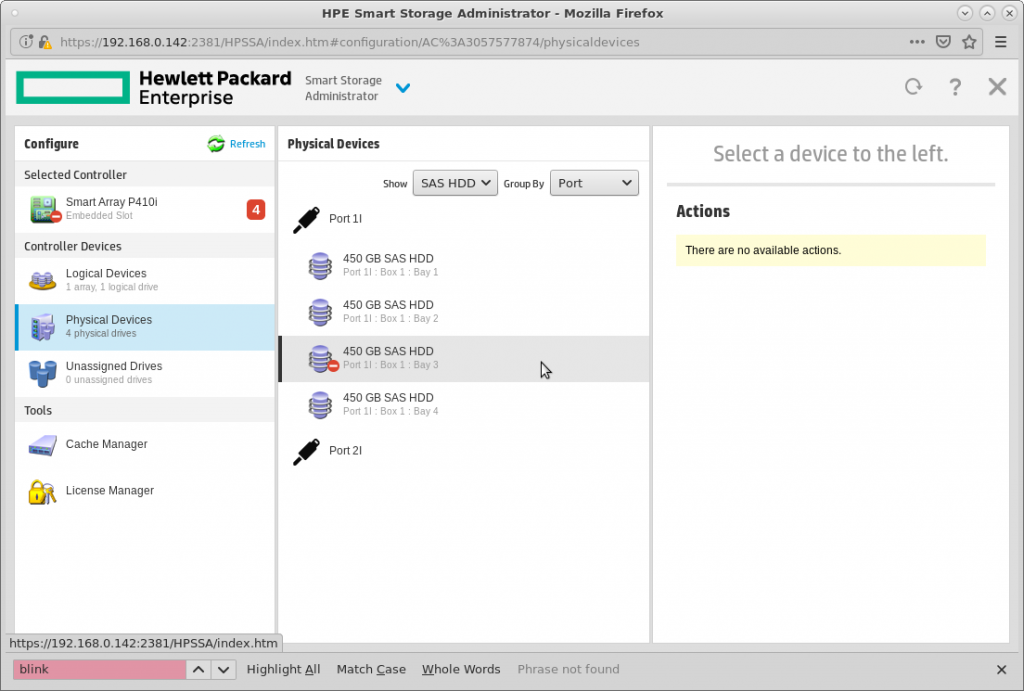

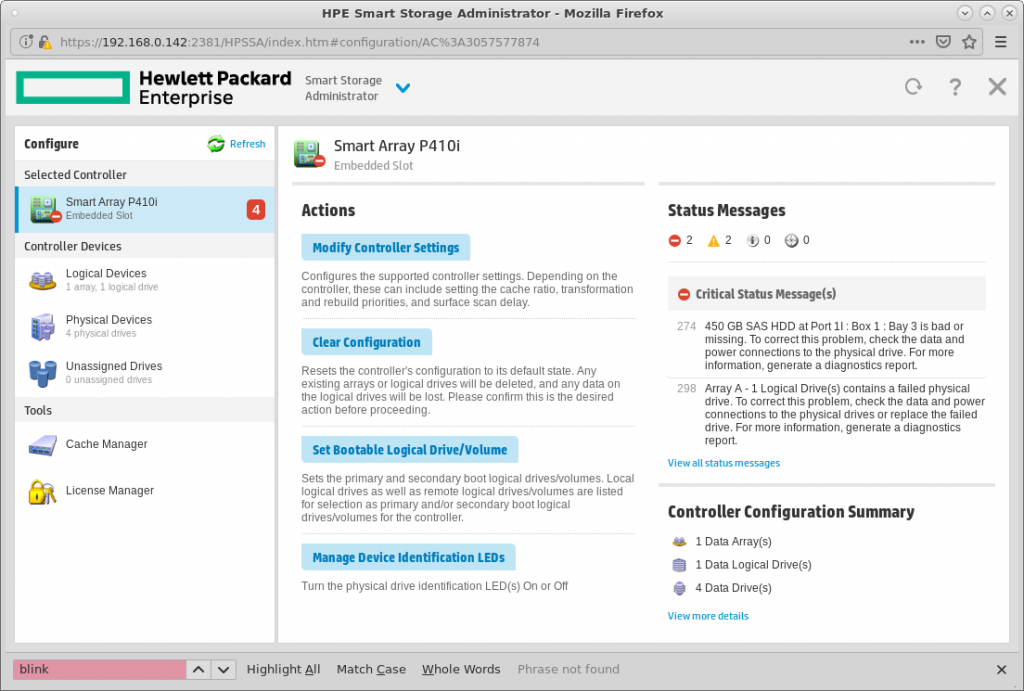

yes, it has 4x SAS slots connected to a: Hewlett-Packard Company Smart Array G6 controllers [103c:323a] (rev 01) Subsystem: Hewlett-Packard Company Smart Array P410i [103c:3245]

find documentation here: https://support.hpe.com/hpsc/doc/public/display?docId=emr_na-c01677092

specs: https://h20195.www2.hpe.com/v2/gethtml.aspx?docname=c04111713

Operating Systems (OS) supported:

was able to install “CentOS-7-x86_64-Minimal-1810”, which detected the controller out of the box (GOOD JOB GUYS!)

… but the performance does not seem to be soooo good.

    # info about controller
    cat /proc/scsi/scsi
    Attached devices:
    Host: scsi0 Channel: 00 Id: 00 Lun: 00
      Vendor: HP       Model: P410i            Rev: 6.60
      Type:   RAID                             ANSI  SCSI revision: 05
    Host: scsi0 Channel: 01 Id: 00 Lun: 00
      Vendor: HP       Model: LOGICAL VOLUME   Rev: 6.60
      Type:   Direct-Access                    ANSI  SCSI revision: 05

    # bench
    time dd if=/dev/zero of=/root/testfile bs=3G count=3 oflag=direct
    dd: warning: partial read (2147479552 bytes); suggest iflag=fullblock
    0+3 records in
    0+3 records out
    6442438656 bytes (6.4 GB) copied, 93.201 s, 69.1 MB/s

    real    1m33.209s

    # comparison with SATA attached SSD
    cat /proc/scsi/scsi
    Attached devices:
    Host: scsi0 Channel: 00 Id: 00 Lun: 00
      Vendor: ATA      Model: SanDisk SDSSDHP2 Rev: 6RL
      Type:   Direct-Access                    ANSI  SCSI revision: 05
    Host: scsi4 Channel: 00 Id: 00 Lun: 00
      Vendor: Intenso  Model: External USB 3.0 Rev: 1405
      Type:   Direct-Access                    ANSI  SCSI revision: 06
    Host: scsi5 Channel: 00 Id: 00 Lun: 00
      Vendor: WD       Model: 10EACS External  Rev: 1.65
      Type:   Direct-Access                    ANSI  SCSI revision: 04

    # bench
    time dd if=/dev/zero of=/root/testfile bs=3G count=3 oflag=direct
    dd: warning: partial read (2147479552 bytes); suggest iflag=fullblock
    0+3 records in
    0+3 records out
    6442438656 bytes (6.4 GB) copied, 30.0913 s, 214 MB/s

    real    0m30.203s
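the `partial read` warning in both runs means that each `bs=3G` read returned only 2147479552 bytes, so 6.4 GB were written instead of the intended 9 GB. a sketch of a rerun with a smaller block size, plus a helper to recompute the throughput from the dd summary line; the 1 MiB block size, the 6 GiB total and both function names are assumptions.

```shell
# write_bench FILE SIZE_MIB
# Sequential write test with 1 MiB blocks, bypassing the page cache.
# oflag=direct and conv=fsync are GNU dd options; the target filesystem
# has to support O_DIRECT.
write_bench() {
    dd if=/dev/zero of="$1" bs=1M count="$2" oflag=direct conv=fsync
    rm -f "$1"
}

# mb_per_s BYTES SECONDS -> throughput in MB/s (decimal megabytes, like dd)
mb_per_s() {
    awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}

mb_per_s 6442438656 93.201     # P410i logical volume run
mb_per_s 6442438656 30.0913    # SATA SSD run
# write_bench /root/testfile 6144   # 6 GiB; uncomment to run for real
```

with these numbers the SSD comes out roughly 3x faster than the logical volume on the P410i.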

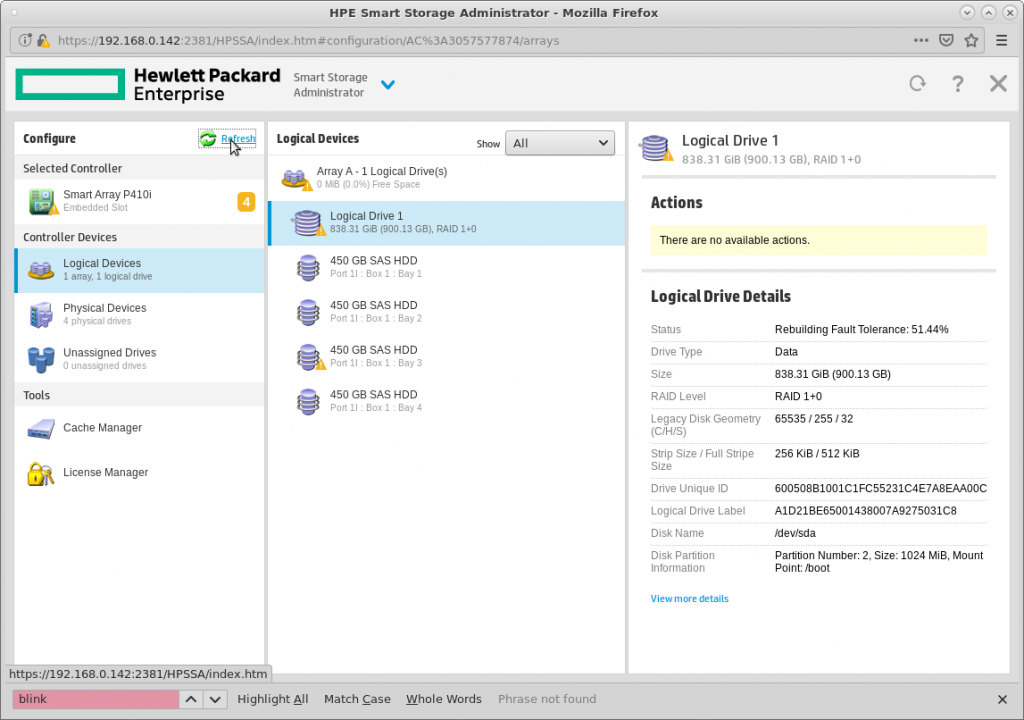

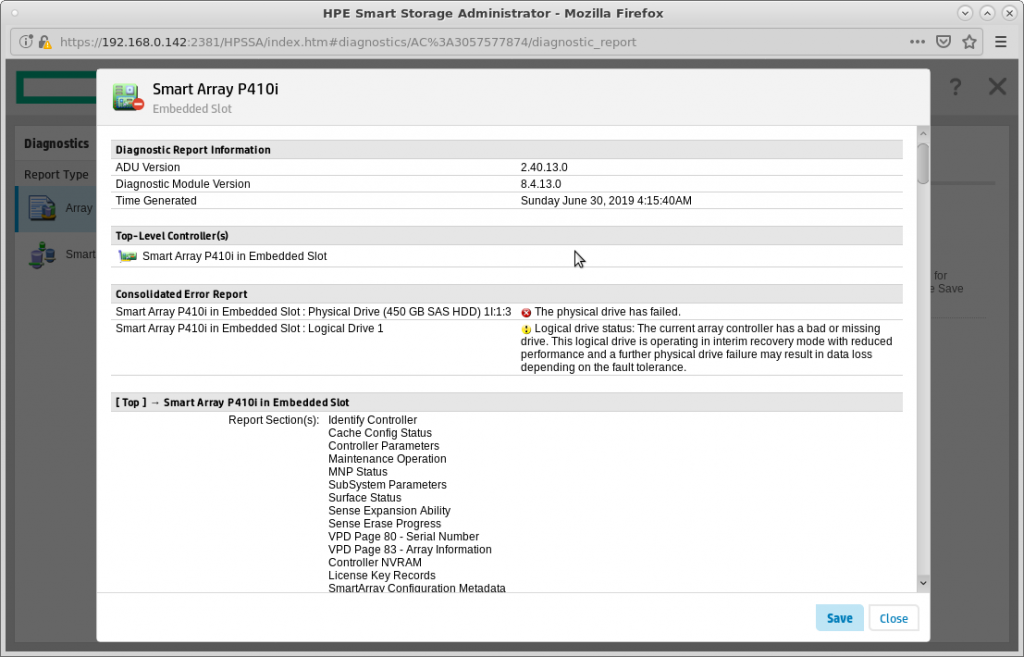

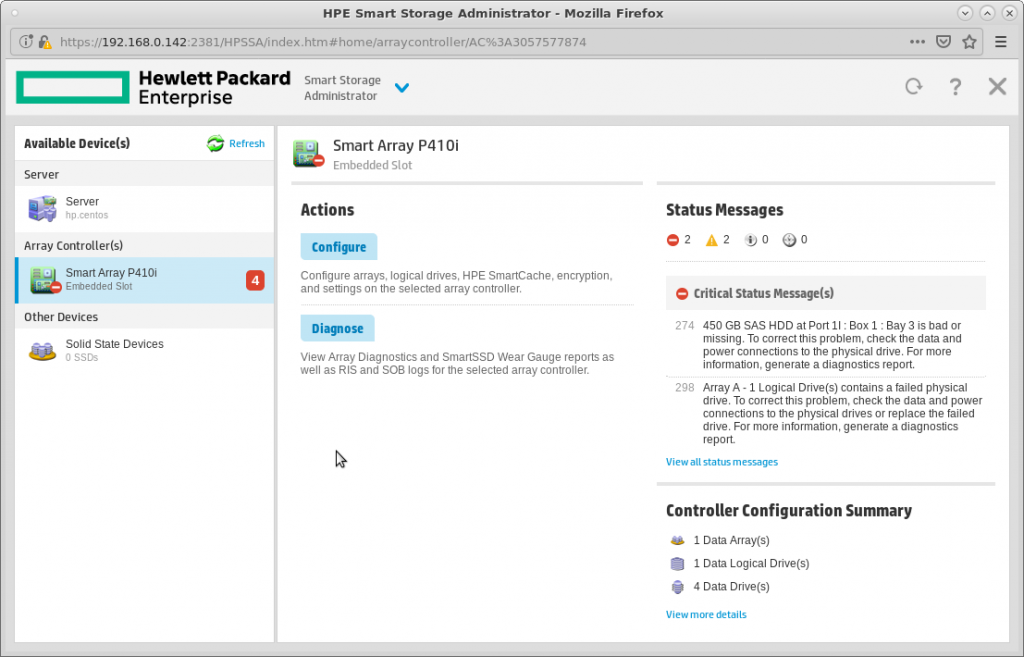

management software for raid?

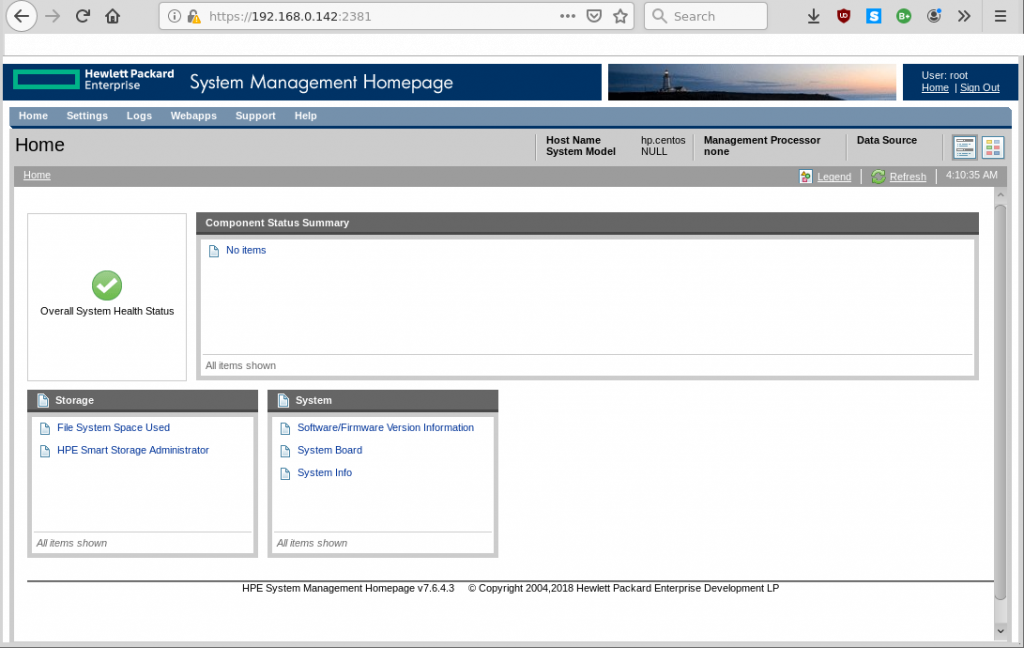

yes, it is available (thanks hp) and works!

“mdadm has an excellent ‘monitor’ mode which will send an email when a problem is detected in any array (more about that later)” (src)

this is exactly what people need:

- good raid harddisk speed

- notification/mail on drive failure

- notification/mail if SMART data gets bad

some LSI RAID controllers (1068e) allow you to switch to a “direct SATA mode” where, instead of managing the RAID itself, the controller gives linux direct access to every single drive, so you can use mdadm (software raid) and circumvent any driver issues.

“The HPE Smart Storage Administrator (HPE SSA) is a web-based application that helps you configure, manage, diagnose, and monitor HPE ProLiant Smart Array Controllers”

https://support.hpe.com/hpsc/swd/public/detail?swItemId=MTX_1e8750e02ce442dcba0c3ac7fc#tab1

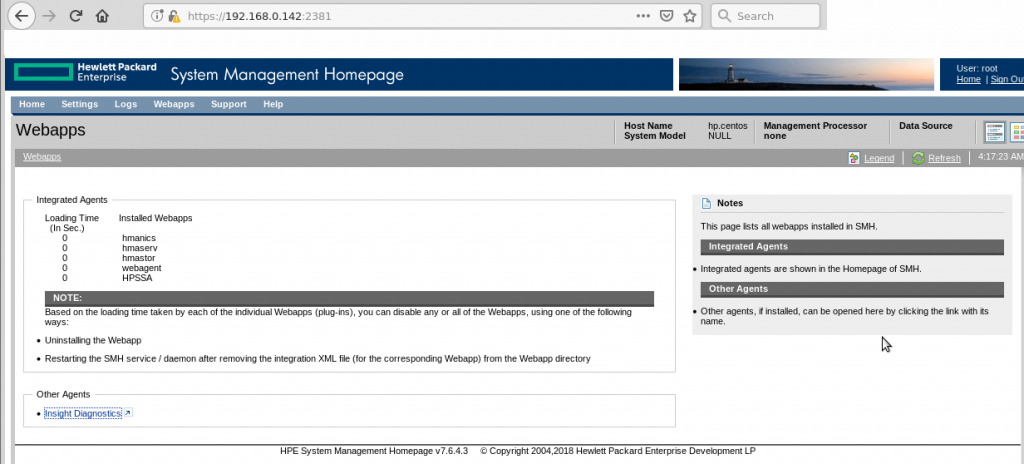

how to install some raid and hp management software for hp servers:

Guide to installing HP System Management Tools CentOS 7

follow this guide -> https://www.centos.org/forums/viewtopic.php?t=55506

    # find your release
    cat /etc/redhat-release
    CentOS Linux release 7.6.1810 (Core)
    rpm --query centos-release
    centos-release-7-6.1810.2.el7.centos.x86_64

    # what is there? and what is what?
    Available Packages
    amsd.x86_64    1.4.0-3066.82.centos7    mcp

    Name        : amsd
    Arch        : x86_64
    Version     : 1.4.0
    Release     : 3066.82.centos7
    Size        : 928 k
    Repo        : mcp
    Summary     : Agentless Management Service
    URL         : http://www.hpe.com/go/proliantlinux
    License     : MIT and BSD
    Description : This package contains the helper daemon that provides information for iLO5
                : embedded health and alerting.

    hponcfg.x86_64    5.4.0-0    mcp

    Available Packages
    Name        : hponcfg
    Arch        : x86_64
    Version     : 5.4.0
    Release     : 0
    Size        : 58 k
    Repo        : mcp
    Summary     : Hponcfg - HP Lights-Out Online Configuration Utility
    URL         : http://www.hp.com/go/ilo
    License     : Proprietary
    Description : Hponcfg is a command line utility that can be used to configure iLO from with in the
                : operating system without requiring a reboot of the server.

    ssa.x86_64    3.40-3.0    mcp

    Installed Packages
    Name        : ssa
    Arch        : x86_64
    Version     : 3.40
    Release     : 3.0
    Size        : 31 M
    Repo        : installed
    From repo   : mcp
    Summary     : Smart Storage Administrator
    URL         : http://www.hpe.com
    License     : See ssa.license
    Description : The Smart Storage Administrator is the storage management
                : application suite for Proliant Servers.

    ssacli.x86_64    3.40-3.0    mcp

    Installed Packages
    Name        : ssacli
    Arch        : x86_64
    Version     : 3.40
    Release     : 3.0
    Size        : 39 M
    Repo        : installed
    From repo   : mcp
    Summary     : Command Line Smart Storage Administrator
    URL         : http://www.hpe.com
    License     : See ssacli.license
    Description : The Command Line Smart Storage Administrator is the storage management
                : application suite for Proliant Servers.

    ssaducli.x86_64    3.40-3.0    mcp

    Installed Packages
    Name        : ssaducli
    Arch        : x86_64
    Version     : 3.40
    Release     : 3.0
    Size        : 11 M
    Repo        : installed
    From repo   : mcp
    Summary     : Smart Storage Diagnostics and SmartSSD Wear Gauge Utility CLI
    URL         : http://www.hpe.com
    License     : See ssadu.license
    Description : The Smart Storage Diagnostics and SmartSSD Wear Gauge Utility is
                : the diagnostic suite for Proliant Server Storage.

where is the web based raid management interface?

    # after starting
    /usr/sbin/ssa -start
    # it is supposed to be accessible via:
    # Access the HPE System Management Homepage from the client browser. http://192.168.0.142:2301
    # Secure URL: https://192.168.0.142:2381/
    # ... but it did not even start...
    # it is not running
    lsof -i -P
    # you can generate yourself a nice overview
    ssa -diag -txt -f ./ReportFile -v

    Smart Storage Administrator 3.40.3.0 2018-12-06
    Command Line Functions:

    -local : Run ssa application
        ssa -local
            Run the application in English.
        ssa -local [ -lang languageCode ]
            Run the application in the language specified by languageCode.
            Supported languageCode/languages are:
            en - English (default)
            ja - Japanese
            de - German
            es - Spanish
            fr - French
            it - Italian
            pt - Portuguese
            ru - Russian
            zh - Simplified Chinese

    -diag : Create Diagnostic (ADU) Report.
        Syntax:
        ssa -diag -f reportFileName [ -v ]
            Generate a complete report. Save to reportFileName.
            -v : Verbose output.
        ssa -diag -txt [ -f textReportFileName ] [ -v ]
            Generate a text report.
            -f textReportFileName : Save output to textReportFileName.
            -v : Verbose output.

    -ssd : Create SSD Wear Report.
        Syntax:
        ssa -ssd -f reportFileName [ -v ]
            Generate a complete report. Save to reportFileName.
            -v : Verbose output.
        ssa -ssd -txt [ -f textReportFileName ] [ -v ]
            Generate a text report.
            -f textReportFileName : Save output to textReportFileName.
            -v : Verbose output.

    -logs : Collect controller serial and basecode logs.
        Syntax:
        ssa -logs -f outputFileName
            Save log contents to outputFileName.

    -start : Start the SSA daemon and enable SMH access
    -stop  : Stop the SSA daemon and disable SMH access
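for the "notification/mail on drive failure" wish from above: behind a hardware raid controller mdadm's monitor mode has no array to watch, so one option might be to poll `ssacli` from cron. this is only a sketch: the slot number, the mail recipient and both function names are placeholders, and the parsing assumes that status lines end in `OK` when everything is fine.

```shell
# status_ok: read ssacli status output on stdin; succeed only if every
# line that reports a status ends in "OK".
# Simplistic assumption about the output format, check it on your system.
status_ok() {
    awk '
        /Status:|physicaldrive/ { if ($NF != "OK") bad = 1 }
        END { exit (bad ? 1 : 0) }
    '
}

# check_raid: meant to be called from cron; mails the report if not OK.
# slot=0 and the recipient "root" are placeholders.
check_raid() {
    report="$(ssacli ctrl all show status; ssacli ctrl slot=0 pd all show status)"
    if ! printf '%s\n' "$report" | status_ok; then
        printf '%s\n' "$report" | mail -s "RAID problem on $(hostname)" root
    fi
}
```

a quick dry run without the controller is to pipe some text into `status_ok`, for example `printf 'Controller Status: OK\n' | status_ok && echo fine`.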

what do you get for all this hassle?

libstoragemgmt

did not compile for me under CentOS7

https://libstorage.github.io/libstoragemgmt-doc/

https://github.com/libstorage/libstoragemgmt/blob/master/INSTALL

Today system administrators utilizing open source solutions have no way to programmatically manage their storage hardware in a vendor neutral way. This project’s main goal is to provide this needed functionality. To facilitate management automation, ease of use and take advantage of storage vendor supported features which improve storage performance and space utilization.

LibStorageMgmt is:

- Community open source project.

- Free software under the GNU Lesser General Public License.

- A long term stable C API.

- A set of bindings for common languages with initial support for python.

- Supporting these actions:

  - List storage pools, volumes, access groups, or file systems.

  - Create and delete volumes, access groups, file systems, or NFS exports.

  - Grant and remove access to volumes, access groups, or initiators.

  - Replicate volumes with snapshots, clones, and copies.

  - Create and delete access groups and edit members of a group.

  - List Linux local SCSI/ATA/NVMe disks.

  - Control IDENT/FAULT LED of local disk via SES(SCSI Enclosure Service).

hpsa plugin package and kernel driver messages:

    yum search hpsa
    libstoragemgmt-hpsa-plugin.noarch : Files for HPE SmartArray support for libstoragemgmt

    yum info libstoragemgmt-hpsa-plugin.noarch
    Available Packages
    Name        : libstoragemgmt-hpsa-plugin
    Arch        : noarch
    Version     : 1.6.2
    Release     : 4.el7
    Size        : 38 k
    Repo        : base/7/x86_64
    Summary     : Files for HPE SmartArray support for libstoragemgmt
    URL         : https://github.com/libstorage/libstoragemgmt
    License     : LGPLv2+
    Description : The libstoragemgmt-hpsa-plugin package contains the plugin for HPE
                : SmartArray storage management via hpssacli.

    cat /proc/scsi/scsi
    Attached devices:
    Host: scsi0 Channel: 00 Id: 00 Lun: 00
      Vendor: HP       Model: P410i            Rev: 6.60
      Type:   RAID                             ANSI  SCSI revision: 05
    Host: scsi0 Channel: 01 Id: 00 Lun: 00
      Vendor: HP       Model: LOGICAL VOLUME   Rev: 6.60
      Type:   Direct-Access                    ANSI  SCSI revision: 05

    dmesg|grep hpsa
    [    3.159603] hpsa 0000:03:00.0: can't disable ASPM; OS doesn't have ASPM control
    [    3.160368] hpsa 0000:03:00.0: Logical aborts not supported
    [    3.160483] hpsa 0000:03:00.0: HP SSD Smart Path aborts not supported
    [    3.235533] scsi host0: hpsa
    [    3.237074] hpsa can't handle SMP requests
    [    3.284365] hpsa 0000:03:00.0: scsi 0:0:0:0: added RAID HP P410i controller SSDSmartPathCap- En- Exp=1
    [    3.284556] hpsa 0000:03:00.0: scsi 0:0:1:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
    [    3.284744] hpsa 0000:03:00.0: scsi 0:0:2:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
    [    3.284987] hpsa 0000:03:00.0: scsi 0:0:3:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
    [    3.286826] hpsa 0000:03:00.0: scsi 0:0:4:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
    [    3.287064] hpsa 0000:03:00.0: scsi 0:0:5:0: masked Enclosure PMCSIERA SRC 8x6G enclosure SSDSmartPathCap- En- Exp=0
    [    3.287258] hpsa 0000:03:00.0: scsi 0:1:0:0: added Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1
    [    3.287517] hpsa can't handle SMP requests
    [  402.141492] hpsa 0000:03:00.0: scsi 0:1:0:0: resetting logical Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1
    [  403.210199] hpsa 0000:03:00.0: device is ready.
    [  403.224936] hpsa 0000:03:00.0: scsi 0:1:0:0: reset logical completed successfully Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1

    dmesg|grep scsi
    [    3.235533] scsi host0: hpsa
    [    3.284365] hpsa 0000:03:00.0: scsi 0:0:0:0: added RAID HP P410i controller SSDSmartPathCap- En- Exp=1
    [    3.284556] hpsa 0000:03:00.0: scsi 0:0:1:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
    [    3.284744] hpsa 0000:03:00.0: scsi 0:0:2:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
    [    3.284987] hpsa 0000:03:00.0: scsi 0:0:3:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
    [    3.286826] hpsa 0000:03:00.0: scsi 0:0:4:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
    [    3.287064] hpsa 0000:03:00.0: scsi 0:0:5:0: masked Enclosure PMCSIERA SRC 8x6G enclosure SSDSmartPathCap- En- Exp=0
    [    3.287258] hpsa 0000:03:00.0: scsi 0:1:0:0: added Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1
    [    3.288270] scsi 0:0:0:0: RAID HP P410i 6.60 PQ: 0 ANSI: 5
    [    3.289283] scsi 0:1:0:0: Direct-Access HP LOGICAL VOLUME 6.60 PQ: 0 ANSI: 5
    [   25.357713] scsi 0:0:0:0: Attached scsi generic sg0 type 12
    [   25.375918] sd 0:1:0:0: Attached scsi generic sg1 type 0
    [  402.141492] hpsa 0000:03:00.0: scsi 0:1:0:0: resetting logical Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1
    [  403.224936] hpsa 0000:03:00.0: scsi 0:1:0:0: reset logical completed successfully Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1

Ubuntu claims to have full driver support for this raid controller (untested)

but Debian and Fedora/CentOS/RedHat seem to work with this controller as well.

and as always… XFS is faster than ext4 X-D

maximum harddisk space seems to be pretty limited for 2.5″ (1TB) but pretty good for 3.5″ SAS (6TB!)

“For information. I have HP DL120 G7, P410. Few days ago I installed Hitachi (HGST) Ultrastar 7K6000 6TB 7200rpm 128MB HUS726060AL5214 0F22811 3.5″ SAS. This for backup, only 1 HDD in volume, RAID0. Work fine. P410 Firmware Version: 6.64. HDD Firmware Version: C7J0.” (src)

ipmi hardware monitoring tools?

    # says already installed if you installed the raid drivers
    /sbin/hponcfg -h
    HP Lights-Out Online Configuration utility
    Version 5.4.0 Date 8/6/2018 (c) 2005,2018 Hewlett Packard Enterprise Development LP
    Firmware Revision = 2.25 Device type = iLO 2 Driver name = hpilo

    USAGE:
      hponcfg -?
      hponcfg -h
      hponcfg -m minFw
      hponcfg -r [-m minFw]
      hponcfg -b [-m minFw]
      hponcfg [-a] -w filename [-m minFw]
      hponcfg -g [-m minFw]
      hponcfg -f filename [-l filename] [-s namevaluepair] [-v] [-m minFw] [-u username] [-p password]
      hponcfg -i [-l filename] [-s namevaluepair] [-v] [-m minFw] [-u username] [-p password]

      -h, --help           Display this message
      -?                   Display this message
      -r, --reset          Reset the Management Processor to factory defaults
      -b, --reboot         Reboot Management Processor without changing any setting
      -f, --file           Get/Set Management Processor configuration from "filename"
      -i, --input          Get/Set Management Processor configuration from the XML input
                           received through the standard input stream.
      -w, --writeconfig    Write the Management Processor configuration to "filename"
      -a, --all            Capture complete Management Processor configuration to the file.
                           This should be used along with '-w' option
      -l, --log            Log replies to "filename"
      -v, --xmlverbose     Display all the responses from Management Processor
      -s, --substitute     Substitute variables present in input config file
                           with values specified in "namevaluepairs"
      -g, --get_hostinfo   Get the Host information
      -m, --minfwlevel     Minimum firmware level
      -u, --username       iLO Username
      -p, --password       iLO Password

    # is iLO LAN interface connected and does it have an ip?
    /sbin/hponcfg -g
    HP Lights-Out Online Configuration utility
    Version 5.4.0 Date 8/6/2018 (c) 2005,2018 Hewlett Packard Enterprise Development LP
    Firmware Revision = 2.25 Device type = iLO 2 Driver name = hpilo
    Host Information:
      Server Name: hp.centos
      Server Serial Number: CZJ0110XXX

    # read out ilo settings to file
    /sbin/hponcfg -w /tmp/hp-ilo.txt
    HP Lights-Out Online Configuration utility
    Version 5.4.0 Date 8/6/2018 (c) 2005,2018 Hewlett Packard Enterprise Development LP
    Firmware Revision = 2.25 Device type = iLO 2 Driver name = hpilo
    Management Processor configuration is successfully written to file "/tmp/hp-ilo.txt"

    # modify ilo config file settings
    vim /tmp/hp-ilo.txt
    <!-- HPONCFG VERSION = "5.4.0" -->
    <!-- Generated 6/30/2019 15:22:34 -->
    <RIBCL VERSION="2.1">
     <LOGIN USER_LOGIN="root" PASSWORD="root">
      <DIR_INFO MODE="write">
      <MOD_DIR_CONFIG>
        <DIR_AUTHENTICATION_ENABLED VALUE = "N"/>
        <DIR_LOCAL_USER_ACCT VALUE = "Y"/>
        <DIR_SERVER_ADDRESS VALUE = ""/>
        <DIR_SERVER_PORT VALUE = "636"/>
        <DIR_OBJECT_DN VALUE = ""/>
        <DIR_OBJECT_PASSWORD VALUE = ""/>
        <DIR_USER_CONTEXT_1 VALUE = ""/>
        <DIR_USER_CONTEXT_2 VALUE = ""/>
        <DIR_USER_CONTEXT_3 VALUE = ""/>
      </MOD_DIR_CONFIG>
      </DIR_INFO>
      <RIB_INFO MODE="write">
      <MOD_NETWORK_SETTINGS>
        <SPEED_AUTOSELECT VALUE = "Y"/>
        <NIC_SPEED VALUE = "10"/>
        <FULL_DUPLEX VALUE = "N"/>
        <IP_ADDRESS VALUE = "192.168.1.123"/>
        <SUBNET_MASK VALUE = "255.255.255.0"/>
        <GATEWAY_IP_ADDRESS VALUE = "192.168.1.1"/>
        <DNS_NAME VALUE = "ILO9Q01MQ0755"/>
        <PRIM_DNS_SERVER value = "0.0.0.0"/>
        <DHCP_ENABLE VALUE = "N"/>
        <DOMAIN_NAME VALUE = ""/>
        <DHCP_GATEWAY VALUE = "Y"/>
        <DHCP_DNS_SERVER VALUE = "Y"/>
        <DHCP_STATIC_ROUTE VALUE = "Y"/>
        <DHCP_WINS_SERVER VALUE = "Y"/>
        <REG_WINS_SERVER VALUE = "Y"/>
        <PRIM_WINS_SERVER value = "0.0.0.0"/>
        <STATIC_ROUTE_1 DEST = "0.0.0.0" GATEWAY = "0.0.0.0"/>
        <STATIC_ROUTE_2 DEST = "0.0.0.0" GATEWAY = "0.0.0.0"/>
        <STATIC_ROUTE_3 DEST = "0.0.0.0" GATEWAY = "0.0.0.0"/>
      </MOD_NETWORK_SETTINGS>
      </RIB_INFO>
      <USER_INFO MODE="write">
      </USER_INFO>
     </LOGIN>
    </RIBCL>

    # read in the values
    /sbin/hponcfg -f /tmp/hp-ilo.txt
    HP Lights-Out Online Configuration utility
    Version 5.4.0 Date 8/6/2018 (c) 2005,2018 Hewlett Packard Enterprise Development LP
    Firmware Revision = 2.25 Device type = iLO 2 Driver name = hpilo
    Integrated Lights-Out will reset at the end of the script.

    Please wait while the firmware is reset. This might take a minute
    Script succeeded

https://support.hpe.com/hpsc/swd/public/detail?swItemId=MTX_5ab6295f49964f16a699064f29#tab2

maxing out ECC RAM by upgrade?

with 2x CPUs you can install 18x 8GByte ECC RAM modules (144GByte in total).

16x 8GByte would still be a plentiful 128GByte of RAM, at ~300€.

RDIMM maximum memory configurations

The following table lists the maximum memory configuration possible with 8 GB RDIMMs.

| Rank | Single Processor | Dual Processor |
|---|---|---|
| Single-rank | 72 GB | 144 GB |
| Dual-rank | 72 GB | 144 GB |
| Quad-rank | 48 GB | 96 GB |

src: support.hpe.com
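the table values are just module count times module size. a small sketch of that arithmetic; the helper names are made up, and the ~300€ is the rough price mentioned above, not a quote:

```shell
# total_gb MODULES SIZE_GB -> installed capacity in GB
total_gb() {
    echo $(( $1 * $2 ))
}

# eur_per_gb PRICE_EUR TOTAL_GB -> rough price per GB
eur_per_gb() {
    awk -v p="$1" -v g="$2" 'BEGIN { printf "%.2f\n", p / g }'
}

total_gb 18 8        # all 18 slots, dual processor
total_gb 16 8        # the cheaper 16 module option
total_gb 12 8        # quad-rank limit of 96 GB means 12 modules
eur_per_gb 300 128   # rough price per GB for the 16 module option
```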
