This is a very basic sequential hard disk write test, performed on both host and guest.

There are several ways to improve the performance:

- Use fixed size disks

- Allocate RAM and CPUs wisely

- Install the Guest Additions

- Enable host I/O caching? (see below for why or why not)
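The first two points can be sketched with `VBoxManage`. This is a minimal sketch, not a recommendation for specific values: the VM name `centos7-guest`, the disk file name, and the sizes are hypothetical, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch: create a fixed-size VDI and set RAM/CPU count for a VM.
# All names and sizes below are hypothetical examples.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"      # dry run: only show the command
    else
        "$@"           # really execute it
    fi
}

create_fixed_disk() {
    local file="$1" size_mb="$2"
    # --variant Fixed preallocates the whole image instead of growing it on demand
    run VBoxManage createmedium disk --filename "$file" --size "$size_mb" \
        --format VDI --variant Fixed
}

set_resources() {
    local vm="$1" ram_mb="$2" cpus="$3"
    # the VM has to be powered off for modifyvm to apply
    run VBoxManage modifyvm "$vm" --memory "$ram_mb" --cpus "$cpus"
}

create_fixed_disk "centos7-guest.vdi" 20480
set_resources "centos7-guest" 2048 2
```

Leave enough RAM and cores for the host itself; a guest that starves the host tends to slow down both.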

Note: while Oracle’s VirtualBox supports a variety of hard disk formats, only its native VDI format has proven to be stable. (If that has changed, please comment.)

So when moving from VMware or Hyper-V to VirtualBox, YOU SHOULD CONVERT ALL YOUR HARD DISK FILES TO THE NATIVE VDI FORMAT!

(An Acronis boot ISO can help here, or any bootable Linux with dd.)
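As an alternative to the boot-ISO route, VirtualBox can also convert an image itself with `VBoxManage clonemedium`. A minimal sketch, assuming hypothetical file names `old-disk.vmdk` and `new-disk.vdi`; by default it only prints the command (set `DRY_RUN=0` to execute it):

```shell
#!/usr/bin/env bash
# Sketch: convert a VMDK/VHD image to VirtualBox's native VDI format.
# File names are hypothetical examples.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"      # dry run: only show the command
    else
        "$@"           # really execute it
    fi
}

convert_to_vdi() {
    local src="$1" dst="$2"
    # clonemedium copies the source image and writes the copy in the requested format
    run VBoxManage clonemedium disk "$src" "$dst" --format VDI
}

convert_to_vdi "old-disk.vmdk" "new-disk.vdi"
```

The source image is left untouched, so keep it as a backup until the guest has booted from the new VDI.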

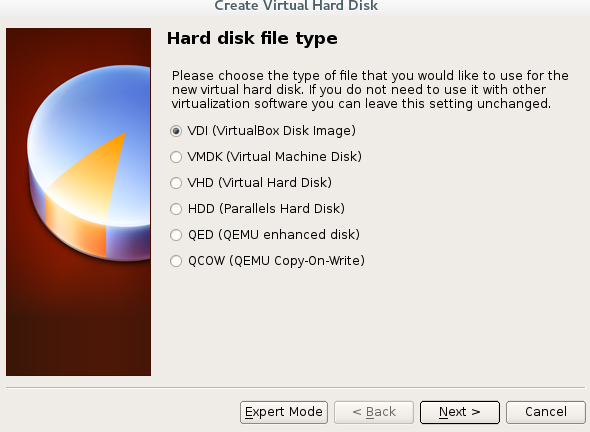

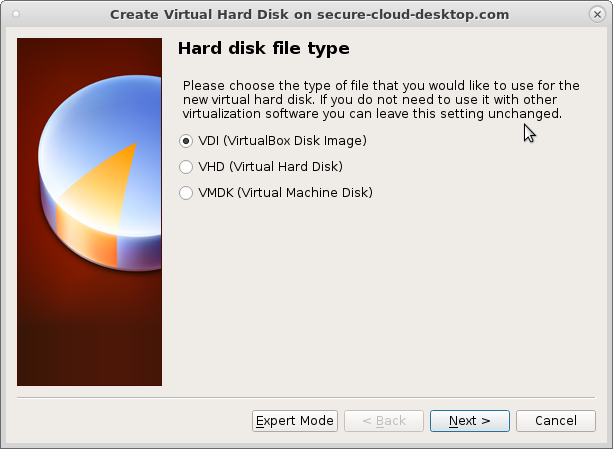

Older versions of VirtualBox supported these hard disk file formats:

In version 5.2.x (the latest at the time of writing), support was reduced to 3 formats (VHD = Hyper-V, VMDK = VMware):

As said: NEVER use VHD or VMDK in production, only VDI!

```shell
# virtualbox host: (16 GByte of RAM, using ext4)
hostnamectl
Operating System: CentOS Linux 7 (Core)
Kernel: Linux 4.XX
Architecture: x86-64

# virtualbox version:
vboxmanage --version
5.2.XX

# sequential write benchmark directly on host
time dd if=/dev/zero of=/root/testfile bs=3G count=3 oflag=direct
dd: warning: partial read (2147479552 bytes); suggest iflag=fullblock
0+3 records in
0+3 records out
6442438656 bytes (6.4 GB) copied, 43.0703 s, 150 MB/s

real 0m43.159s
user 0m0.000s
sys 0m4.412s

# virtualbox guest (2 GByte of RAM, using xfs, centos7, DISABLED i/o host caching)
hostnamectl
Operating System: CentOS Linux 7 (Core)
Kernel: Linux 4.20.X (very latest)
Architecture: x86-64

# with vbox guest additions
modinfo vboxguest
filename: /lib/modules/4.20.X/kernel/drivers/virt/vboxguest/vboxguest.ko
license: GPL
description: Oracle VM VirtualBox Guest Additions for Linux Module
author: Oracle Corporation
name: vboxguest
vermagic: 4.20.X SMP mod_unload modversions

# sequential write benchmark on virtualbox guest
time dd if=/dev/zero of=/root/testfile bs=3G count=3 oflag=direct
dd: warning: partial read (2147479552 bytes); suggest iflag=fullblock
0+3 records in
0+3 records out
6442438656 bytes (6.4 GB) copied, 186.81 s, 34.5 MB/s

# so you can calc
# sequential harddisk write
# (largely uncached on the first run)
# is -77% X-D

# now let's enable host i/o caching
# virtualbox guest (using xfs, centos7, ENABLED i/o host caching)
# sequential write benchmark on virtualbox guest
time dd if=/dev/zero of=/root/testfile bs=3G count=3 oflag=direct
dd: warning: partial read (2147479552 bytes); suggest iflag=fullblock
0+3 records in
0+3 records out
6442438656 bytes (6.4 GB) copied, 70.8442 s, 90.9 MB/s

real 1m11.059s
user 0m0.000s
sys 0m25.019s

# WOW! this raised sequential harddisk write performance to about 2.6x!
# +163% improvement in comparison to no i/o host caching (90.9 vs 34.5 MB/s)
# still about 39% slower than directly on hardware (90.9 vs 150 MB/s)
```
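The relative changes can be recomputed from the measured throughput figures (150 MB/s on the host, 34.5 MB/s in the guest without host I/O caching, 90.9 MB/s with it). A small sketch using awk; the helper name `pct_change` is made up for this example:

```shell
#!/usr/bin/env bash
# pct_change OLD NEW: print the relative change from OLD to NEW in percent.
pct_change() {
    LC_ALL=C awk -v old="$1" -v new="$2" \
        'BEGIN { printf "%+.1f%%\n", (new - old) / old * 100 }'
}

pct_change 150 34.5    # host -> guest, caching disabled:  -77.0%
pct_change 34.5 90.9   # caching disabled -> enabled:      +163.5%
pct_change 150 90.9    # host -> guest, caching enabled:   -39.4%
```

Put differently: with host I/O caching the guest writes at about 2.6 times its uncached speed, which is roughly 61% of the host's native speed.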

So what is the CATCH with i/o host caching?

In other words: why is it not enabled by default, if it gives roughly 2.6x the hard disk (write) performance?

“it basically boils down to safety over performance”

Update 2020-11: I/O host caching has been turned on for a lot of Windows- and Linux-based VirtualBox VMs (largely Win7 64-bit, but also Debian 10 and CentOS 7) and not a single problem occurred. It is now the new default setting here, because it gives great performance benefits. After all, virtual hard disk speed is (of course) considerably slower than direct host hard disk speed, and fast real and virtual hard disks are crucial for fast, fluid IT (no holdups, fast page loads, etc.).

Here are the disadvantages of having that setting on (these points may not apply to you):

- Delayed writing through the host OS cache is less secure. When the guest OS writes data, it considers the data written even though it has not yet arrived on a physical disk. If for some reason the write does not happen (power failure, host crash), the likelihood of data loss increases.

- Disk image files tend to be very large. Caching them can therefore quickly use up the entire host OS cache. Depending on the efficiency of the host OS caching, this may slow down the host immensely, especially if several VMs run at the same time. For example, on Linux hosts, host caching may result in Linux delaying all writes until the host cache is nearly full and then writing out all these changes at once, possibly stalling VM execution for minutes (unresponsive for minutes until the cache is written). This can result in I/O errors in the guest as I/O requests time out there.

- Physical memory is often wasted as guest operating systems typically have their own I/O caches, which may result in the data being cached twice (in both the guest and the host caches) for little effect.

“If you decide to disable host I/O caching for the above reasons, VirtualBox uses its own small cache to buffer writes, but no read caching since this is typically already performed by the guest OS. In addition, VirtualBox fully supports asynchronous I/O for its virtual SATA, SCSI and SAS controllers through multiple I/O threads.”
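Besides the checkbox in the storage settings dialog, the setting can be switched per storage controller with `VBoxManage storagectl`. A minimal sketch, assuming a hypothetical VM named `centos7-guest` with a controller named `SATA` (check the real names with `VBoxManage showvminfo`); the VM should be powered off, and the script only prints the command unless `DRY_RUN=0` is set:

```shell
#!/usr/bin/env bash
# Sketch: switch host I/O caching on or off for one storage controller.
# VM name and controller name are hypothetical examples.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"      # dry run: only show the command
    else
        "$@"           # really execute it
    fi
}

set_host_io_cache() {
    local vm="$1" controller="$2" state="$3"
    case "$state" in
        on|off) ;;
        *) echo "state must be 'on' or 'off'" >&2; return 1 ;;
    esac
    run VBoxManage storagectl "$vm" --name "$controller" --hostiocache "$state"
}

set_host_io_cache "centos7-guest" "SATA" on
```

Running it with `off` restores the safer behaviour described in the quote above.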

Thanks, Craig (from this src)

AHA!

Because one is a curious fellow… now the sequential dd read benchmark X-D with i/o host caching enabled.

As stated by Craig, it should not improve read performance much.

```shell
# again: rerun of the benchmark inside guest, ENABLED i/o host caching
time dd if=/dev/zero of=/root/testfile bs=3G count=3 oflag=direct
dd: warning: partial read (2147479552 bytes); suggest iflag=fullblock
0+3 records in
0+3 records out
6442438656 bytes (6.4 GB) copied, 62.6793 s, 103 MB/s

real 1m2.831s
user 0m0.000s
sys 0m25.277s

# now read benchmark inside guest, ENABLED i/o host caching
time dd if=/root/testfile bs=1024k count=6143 of=/dev/null
6143+0 records in
6143+0 records out
6441402368 bytes (6.4 GB) copied, 2.42869 s, 2.7 GB/s

real 0m2.431s <- TWO SECONDS X-D, this is definitely 100% RAM cache speed
user 0m0.009s
sys 0m2.414s

# now read benchmark inside guest, DISABLED i/o host caching
# write speeds were, again, pretty slow
# read speeds:
time dd if=/root/testfile bs=1024k count=6143 of=/dev/null
6143+0 records in
6143+0 records out
# 1st run
6441402368 bytes (6.4 GB) copied, 3.49446 s, 1.8 GB/s, almost 1 GByte/s less!
# 2nd run
6441402368 bytes (6.4 GB) copied, 3.84024 s, 1.7 GB/s
# 3rd run
6441402368 bytes (6.4 GB) copied, 3.5566 s, 1.8 GB/s

real 0m3.505s
user 0m0.004s
sys 0m2.575s
```

… so it seems i/o host caching also improves sequential read performance.

More hard disk benchmark results:

ProLiant DL360 G6 with an HP P410i RAID controller:

```shell
lshw -class system -class processor
hp.centos
    description: Rack Mount Chassis
    product: ProLiant DL360 G6 (1234554321)
    vendor: HP
    serial: CZJ0110MBL
    width: 64 bits
    capabilities: smbios-2.7 dmi-2.7 smp vsyscall32
    configuration: boot=hardware-failure-fw chassis=rackmount family=ProLiant sku=1234554321 uuid=31323334-3535-435A-4A30-3131304D424C
  *-cpu:0
       description: CPU
       product: Intel(R) Xeon(R) CPU E5540 @ 2.53GHz

lshw -class tape -class disk -class storage -short
H/W path          Device    Class    Description
=========================================================
/0/100/1/0        scsi0     storage  Smart Array G6 controllers
/0/100/1/0/1.0.0  /dev/sda  disk     900GB LOGICAL VOLUME

# try to get exact RAID controller model
cat /proc/scsi/scsi
Attached devices:
Host: scsi0 Channel: 00 Id: 00 Lun: 00
  Vendor: HP Model: P410i Rev: 6.60
  Type: RAID ANSI SCSI revision: 05
Host: scsi0 Channel: 01 Id: 00 Lun: 00
  Vendor: HP Model: LOGICAL VOLUME Rev: 6.60
  Type: Direct-Access ANSI SCSI revision: 05

dmesg|grep hpsa
[ 3.159603] hpsa 0000:03:00.0: can't disable ASPM; OS doesn't have ASPM control
[ 3.160368] hpsa 0000:03:00.0: Logical aborts not supported
[ 3.160483] hpsa 0000:03:00.0: HP SSD Smart Path aborts not supported
[ 3.235533] scsi host0: hpsa
[ 3.237074] hpsa can't handle SMP requests
[ 3.284365] hpsa 0000:03:00.0: scsi 0:0:0:0: added RAID HP P410i controller SSDSmartPathCap- En- Exp=1
[ 3.284556] hpsa 0000:03:00.0: scsi 0:0:1:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
[ 3.284744] hpsa 0000:03:00.0: scsi 0:0:2:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
[ 3.284987] hpsa 0000:03:00.0: scsi 0:0:3:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
[ 3.286826] hpsa 0000:03:00.0: scsi 0:0:4:0: masked Direct-Access NETAPP X421_HCOBD450A10 PHYS DRV SSDSmartPathCap- En- Exp=0
[ 3.287064] hpsa 0000:03:00.0: scsi 0:0:5:0: masked Enclosure PMCSIERA SRC 8x6G enclosure SSDSmartPathCap- En- Exp=0
[ 3.287258] hpsa 0000:03:00.0: scsi 0:1:0:0: added Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1
[ 3.287517] hpsa can't handle SMP requests
[ 402.141492] hpsa 0000:03:00.0: scsi 0:1:0:0: resetting logical Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1
[ 403.210199] hpsa 0000:03:00.0: device is ready.
[ 403.224936] hpsa 0000:03:00.0: scsi 0:1:0:0: reset logical completed successfully Direct-Access HP LOGICAL VOLUME RAID-1(+0) SSDSmartPathCap- En- Exp=1

# run sequential benchmark
# this is probably not a good result, maybe one disk is defective?
time dd if=/dev/zero of=/root/testfile bs=3G count=3 oflag=direct
dd: warning: partial read (2147479552 bytes); suggest iflag=fullblock
0+3 records in
0+3 records out
6442438656 bytes (6.4 GB) copied, 96.0937 s, 67.0 MB/s

real 1m36.102s
```
